
ControlNet: The Complete Guide

Table of contents

What is ControlNet?

Setting up ControlNet: A step-by-step tutorial on getting started.

Exploring ControlNet Models: Covering models supported by Odyssey and other popular ones.

Understanding ControlNet Settings: How different settings impact ControlNet's output.

Workflows: Various use cases for integrating ControlNet with Odyssey.

What is ControlNet?

ControlNet is a revolutionary method for controlling specific aspects of the images produced by diffusion models, particularly Stable Diffusion. Technically, ControlNet is a neural network structure that controls a diffusion model by adding extra conditions, such as an edge map, a depth map, or a pose, alongside the text prompt. If you're just getting started with Stable Diffusion, this means that instead of generating an image from a vague prompt alone, you can give the model precise inputs so that the output aligns closely with your vision.
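How can extra conditions be added without breaking what Stable Diffusion already knows? The original ControlNet paper keeps the Stable Diffusion weights locked, trains a copy of its encoder blocks on the condition, and joins the two with "zero convolutions": 1x1 convolutions whose weights and biases start at zero. The toy sketch below illustrates that idea in plain Python. It shrinks everything to a single pixel's channel vector (so a 1x1 convolution is just a matrix-vector product), and `Conv1x1`, `controlnet_block`, and the feature values are hypothetical stand-ins, not real Stable Diffusion layers.

```python
import random


class Conv1x1:
    """A 1x1 convolution on one pixel's channel vector: W @ x + b."""

    def __init__(self, channels, zero_init=False):
        def init():
            return 0.0 if zero_init else random.uniform(-1.0, 1.0)

        self.w = [[init() for _ in range(channels)] for _ in range(channels)]
        self.b = [init() for _ in range(channels)]

    def __call__(self, x):
        return [sum(wij * xj for wij, xj in zip(row, x)) + bi
                for row, bi in zip(self.w, self.b)]


def add(a, b):
    return [ai + bi for ai, bi in zip(a, b)]


def controlnet_block(x, c, locked, trainable, zero_in, zero_out):
    """y = F(x) + Z_out(F_copy(x + Z_in(c)))  (toy, single layer)."""
    return add(locked(x), zero_out(trainable(add(x, zero_in(c)))))


random.seed(0)
channels = 4
locked = Conv1x1(channels)                  # stand-in for a frozen SD layer
trainable = Conv1x1(channels)               # the trainable copy...
trainable.w = [row[:] for row in locked.w]  # ...initialised from the locked weights
trainable.b = locked.b[:]
zero_in = Conv1x1(channels, zero_init=True)
zero_out = Conv1x1(channels, zero_init=True)

x = [0.5, -1.0, 2.0, 0.25]   # hypothetical image features at one pixel
c = [1.0, 1.0, 0.0, -3.0]    # hypothetical condition features (e.g. from an edge map)

y = controlnet_block(x, c, locked, trainable, zero_in, zero_out)
print(y == locked(x))        # True: before training, the control branch adds nothing
```

Because both zero convolutions output zeros at first, the combined model starts out behaving exactly like the original one, and training only gradually lets the condition steer the result.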

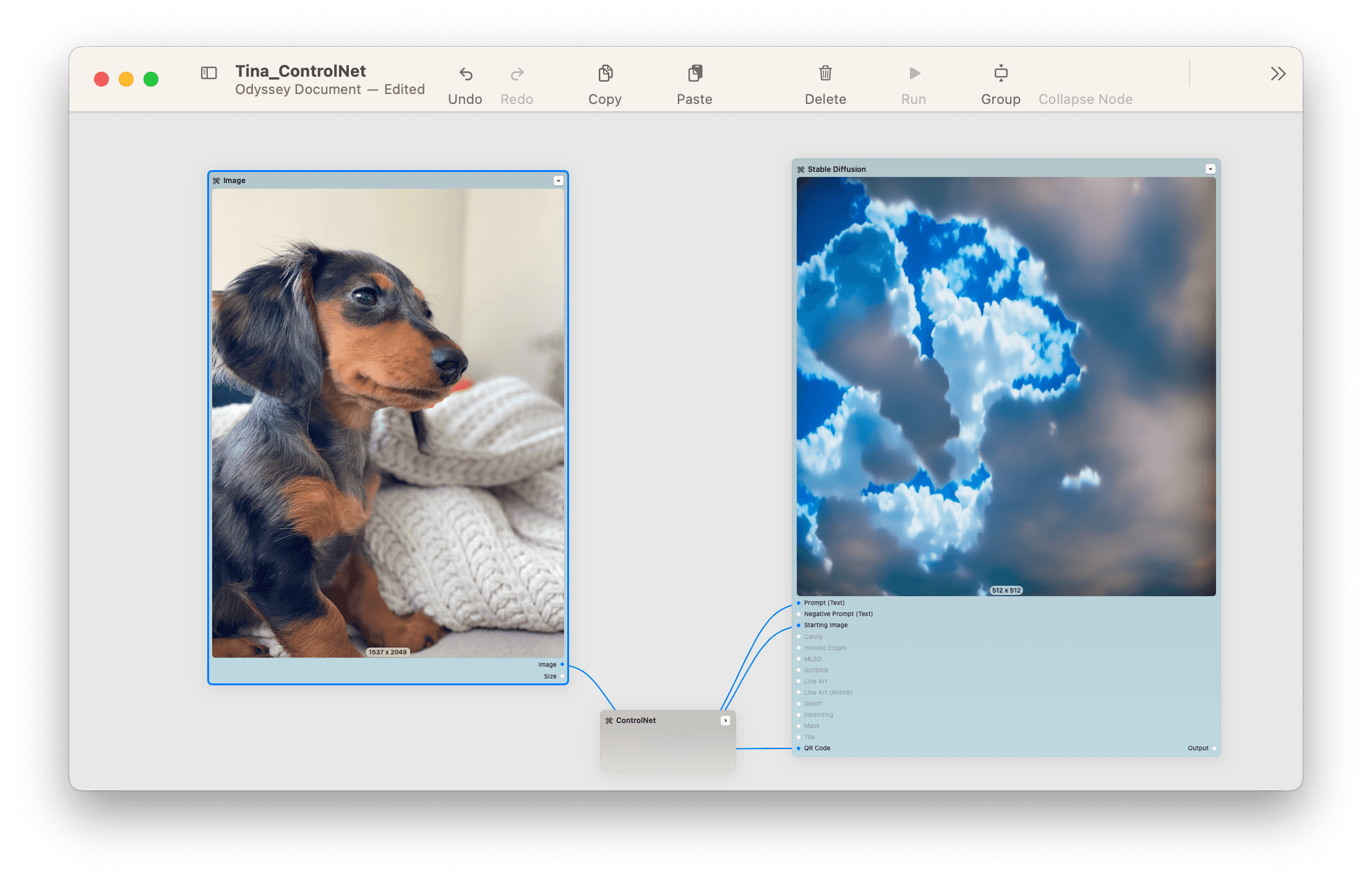

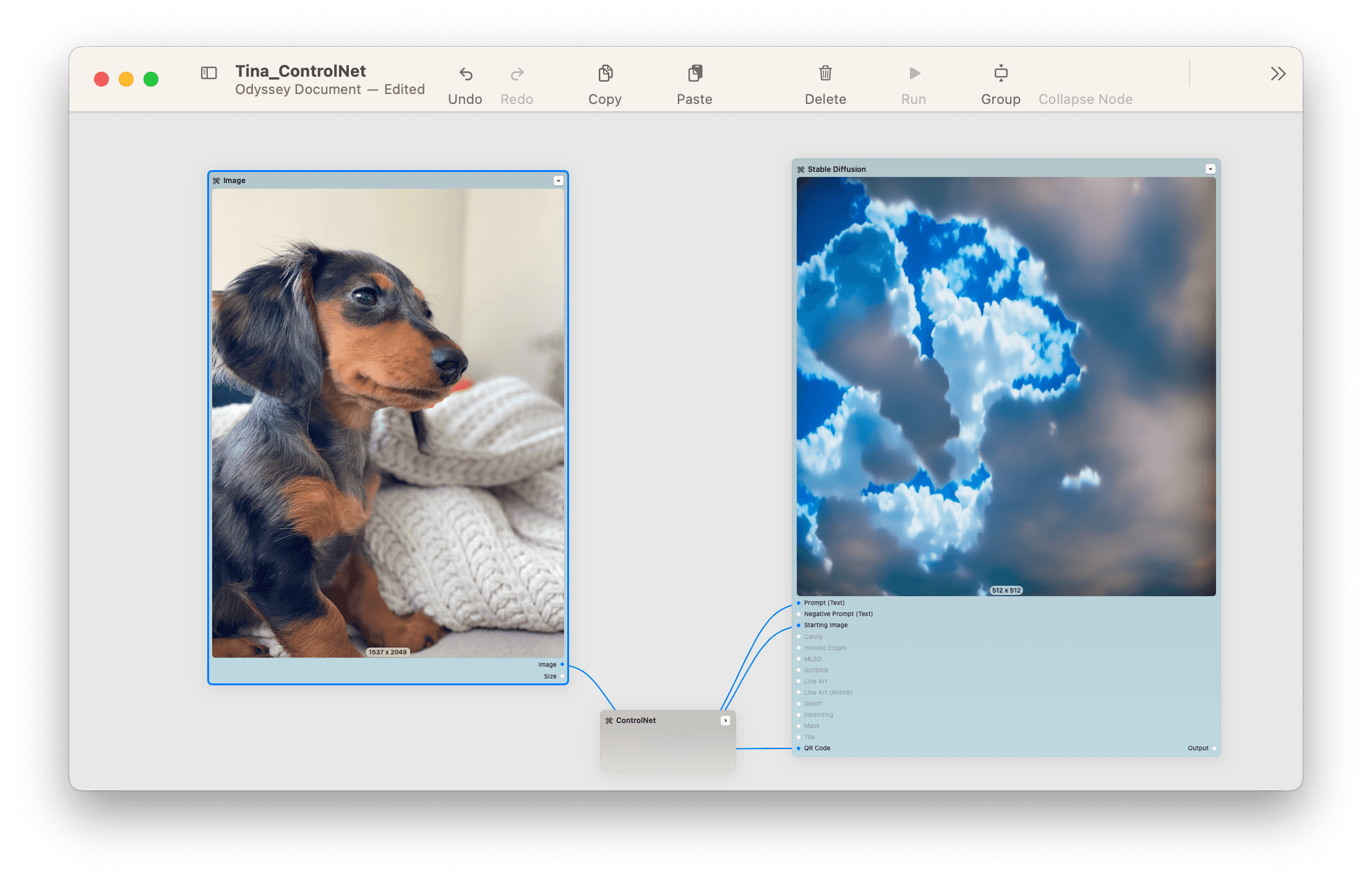

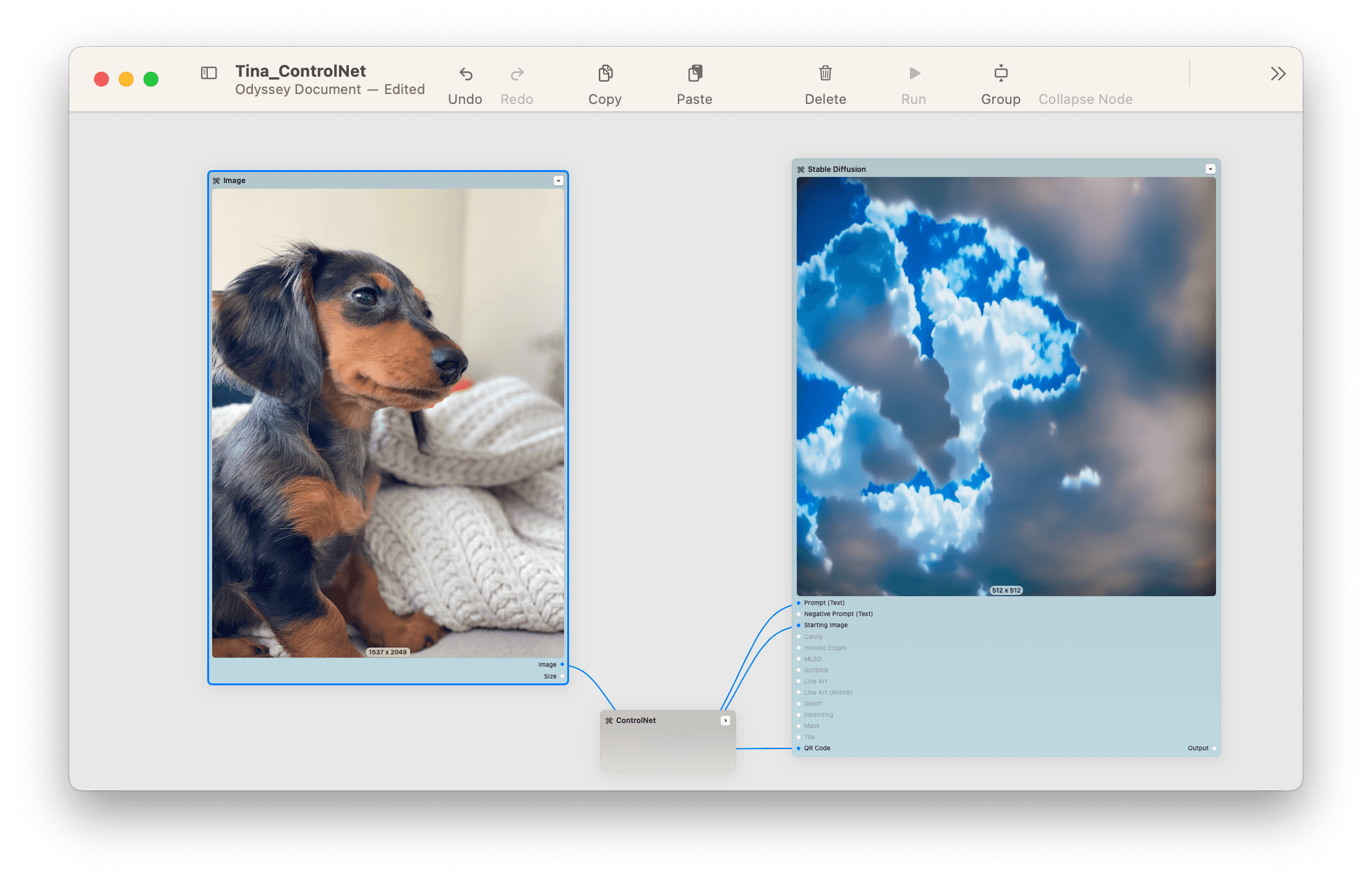

For instance, imagine feeding your Stable Diffusion model a prompt like "photo of a blue sky with white fluffy clouds." Without ControlNet, you get a random sky with arbitrarily arranged clouds. With ControlNet, you can also supply an input image, such as a shape or pattern, and have the clouds form around it, crafting a far more specific image.

Our primary focus will be on ControlNet 1.1 for Stable Diffusion V1.5. Stay tuned for our upcoming guide on SDXL ControlNet options for Odyssey.

Getting Started with ControlNet

For Mac Users:

We might be biased, but we think Odyssey is the most user-friendly way for Mac users to begin their ControlNet journey.

Simply launch Odyssey and select the models you want to download, such as Canny Edges and Monster QR; you can add more at any time from the download section. Next, connect the ControlNet node to the appropriate parameter on your Stable Diffusion node. We'll cover each model's unique inputs in the next section. For those seeking a more advanced setup, we'll compare Odyssey with Automatic1111 and ComfyUI in a separate guide.

For Windows Users:

Currently, Odyssey supports only Mac. Some online tools support ControlNet, but we can't really recommend them: they are often slow or charge too much to run the models in the cloud. If you have enough computing power, running models locally gives you control over both your costs and your generations. For Windows users, we recommend exploring Automatic1111 or ComfyUI. Or switching to Mac 🙃

Diving into ControlNet Models

Odyssey offers a wide range of models. Let's take a look at each one.

Canny Edges

This model uses the Canny edge-detection algorithm to highlight the edges in an image. It's particularly effective for structured objects and poses, and can even capture facial details such as wrinkles. If you're new to Stable Diffusion, starting with Canny Edges is a great way to get familiar with what ControlNet can do.
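For a feel of what the Canny Edges node produces, here is a minimal pure-Python sketch of the classic Canny steps on a tiny grayscale grid: Sobel gradients, non-maximum suppression to thin the edges, and double-threshold hysteresis. It is a simplified illustration, not Odyssey's implementation: it skips the Gaussian blur that normally comes first, and the thresholds are illustrative. In practice a library call such as OpenCV's `cv2.Canny(image, low, high)` does all of this for you.

```python
import math
from collections import deque


def canny(img, low, high):
    """Simplified Canny edge detector for a 2D list of grayscale values.

    Skips the Gaussian pre-blur; returns a same-sized 2D list of 0/255.
    """
    h, w = len(img), len(img[0])
    mag = [[0.0] * w for _ in range(h)]
    ang = [[0.0] * w for _ in range(h)]

    # 1. Sobel gradients (the one-pixel border is left at zero).
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = (img[y-1][x+1] + 2 * img[y][x+1] + img[y+1][x+1]
                  - img[y-1][x-1] - 2 * img[y][x-1] - img[y+1][x-1])
            gy = (img[y+1][x-1] + 2 * img[y+1][x] + img[y+1][x+1]
                  - img[y-1][x-1] - 2 * img[y-1][x] - img[y-1][x+1])
            mag[y][x] = math.hypot(gx, gy)
            ang[y][x] = math.degrees(math.atan2(gy, gx)) % 180

    # 2. Non-maximum suppression: keep a pixel only if it is the peak along
    #    its gradient direction (ties go to one side so edges stay 1 px wide).
    thin = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            a = ang[y][x]
            if a < 22.5 or a >= 157.5:
                dy, dx = 0, 1      # horizontal gradient: compare left/right
            elif a < 67.5:
                dy, dx = 1, 1      # diagonal
            elif a < 112.5:
                dy, dx = 1, 0      # vertical gradient: compare up/down
            else:
                dy, dx = 1, -1     # anti-diagonal
            m = mag[y][x]
            if m > mag[y - dy][x - dx] and m >= mag[y + dy][x + dx]:
                thin[y][x] = m

    # 3. Double threshold + hysteresis: strong pixels seed edges, which then
    #    grow through 8-connected neighbours that pass the low threshold.
    out = [[0] * w for _ in range(h)]
    queue = deque()
    for y in range(h):
        for x in range(w):
            if thin[y][x] >= high:
                out[y][x] = 255
                queue.append((y, x))
    while queue:
        y, x = queue.popleft()
        for ny in range(max(y - 1, 0), min(y + 2, h)):
            for nx in range(max(x - 1, 0), min(x + 2, w)):
                if out[ny][nx] == 0 and thin[ny][nx] >= low:
                    out[ny][nx] = 255
                    queue.append((ny, nx))
    return out


step = [[0, 0, 0, 100, 100, 100] for _ in range(6)]  # a vertical step edge
for row in canny(step, low=100, high=200):
    print(row)
```

The result is a thin white line where the brightness jumps, which is exactly the kind of outline ControlNet then uses as a structural guide.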

Here's an example of a workflow where we embedded text within an image. We took an image of the word 'Odyssey,' ran it through Canny Edges, then fed the result into Stable Diffusion.

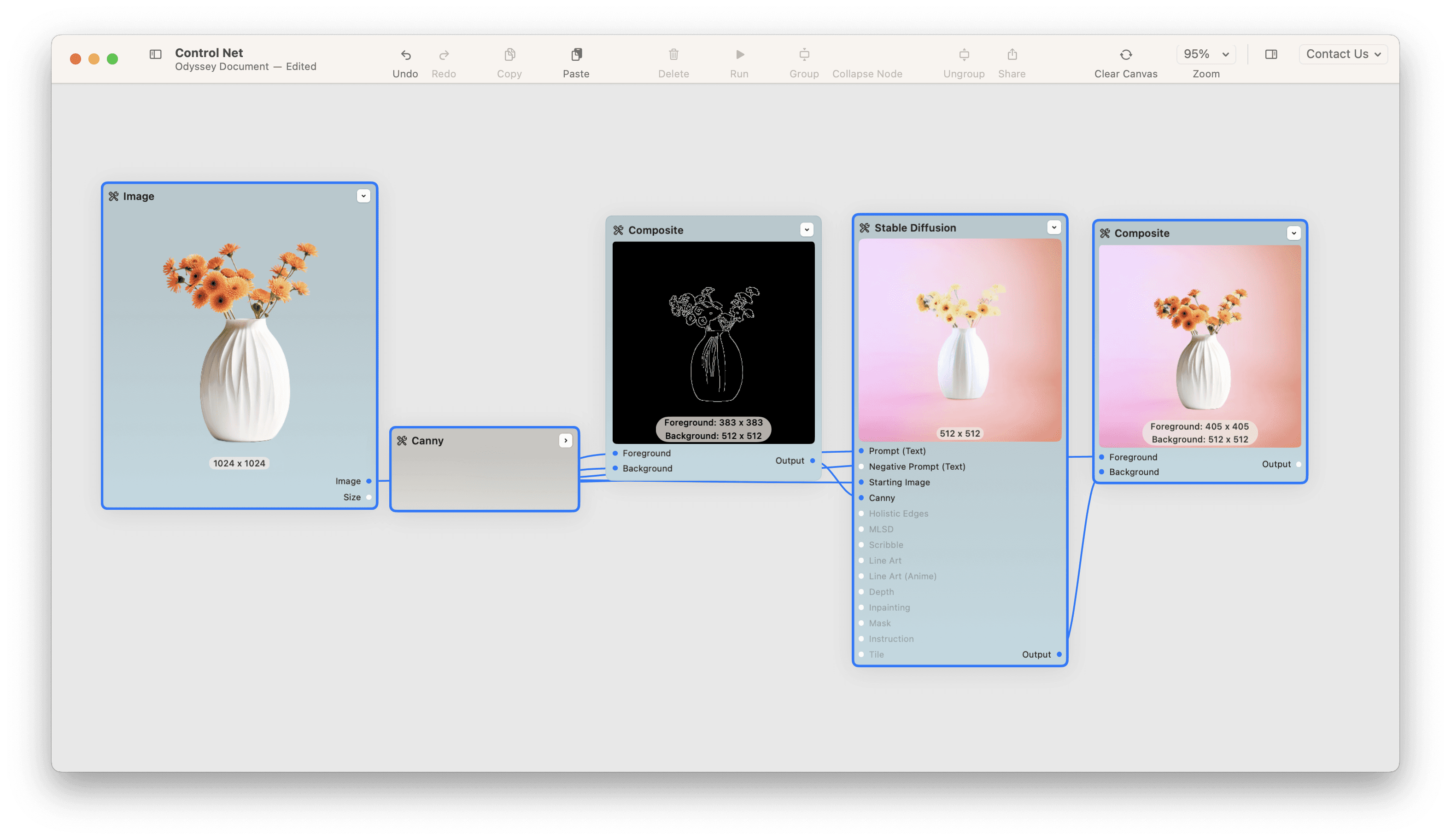

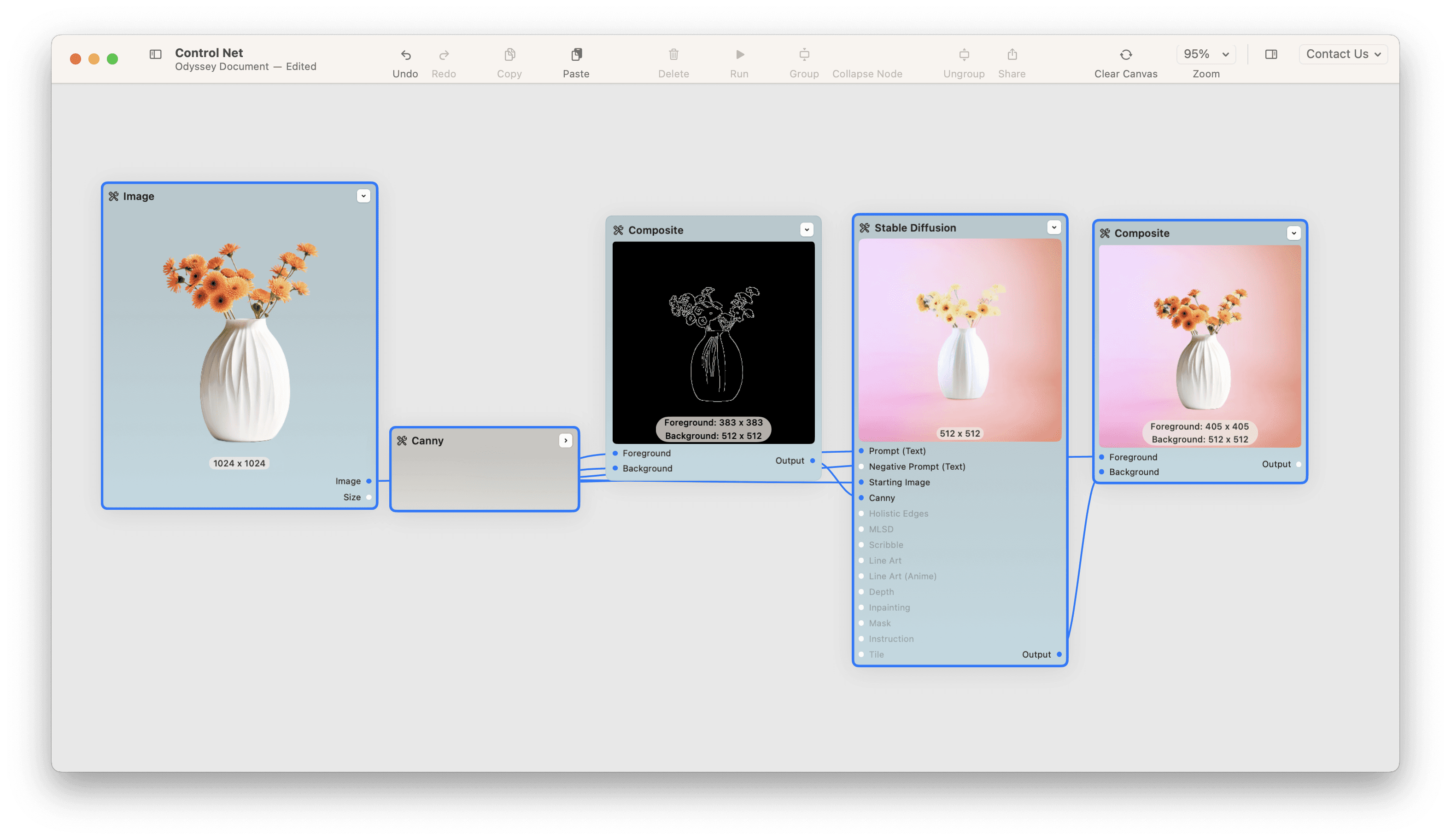

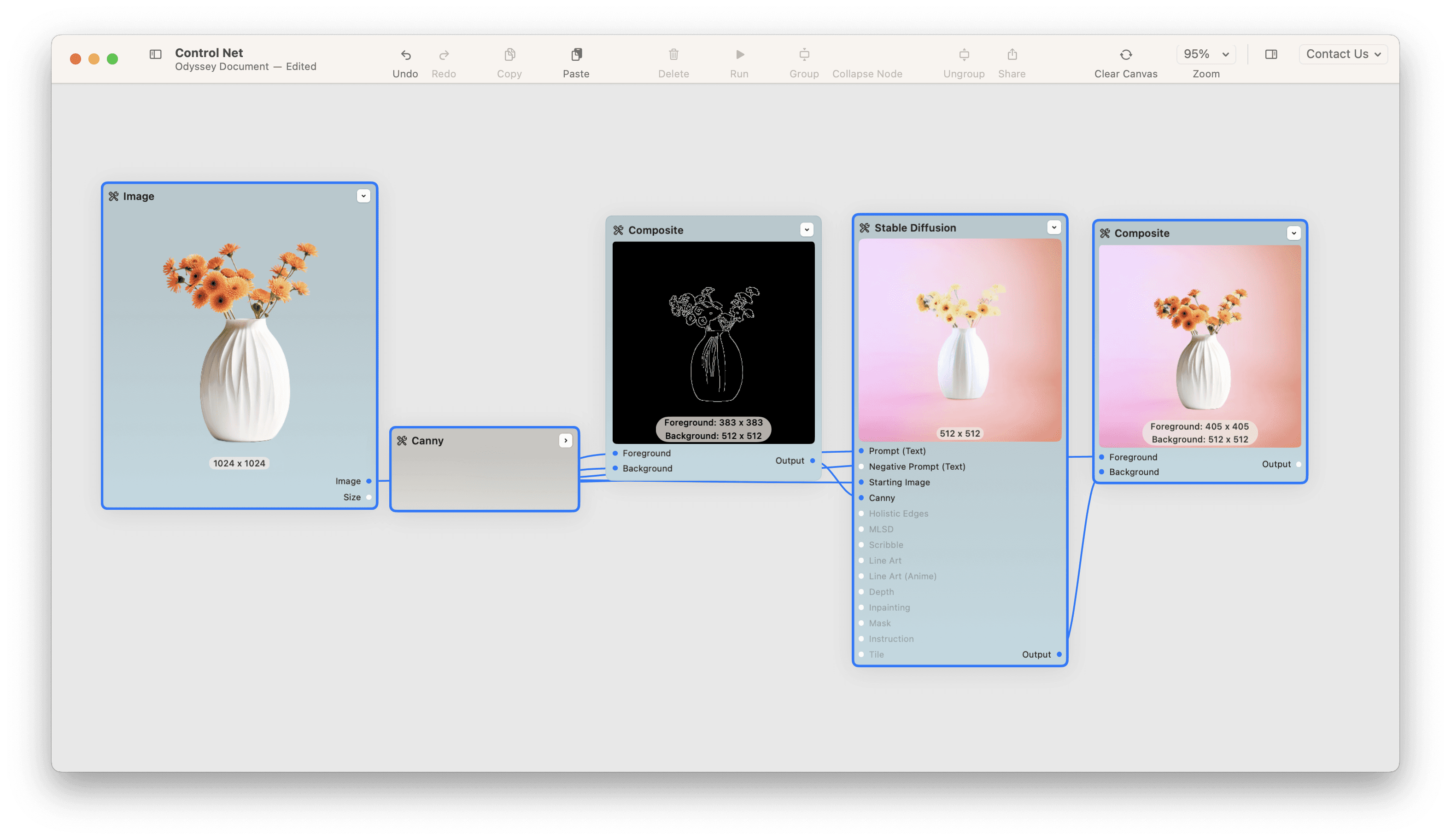

Next, here's an AI product photography workflow: we took an image of a vase, ran it through Canny Edges, then generated a new vase with the same shape on a gradient background. This allowed us to then place the original vase photograph on top of the generated one, giving us realistic product photography.

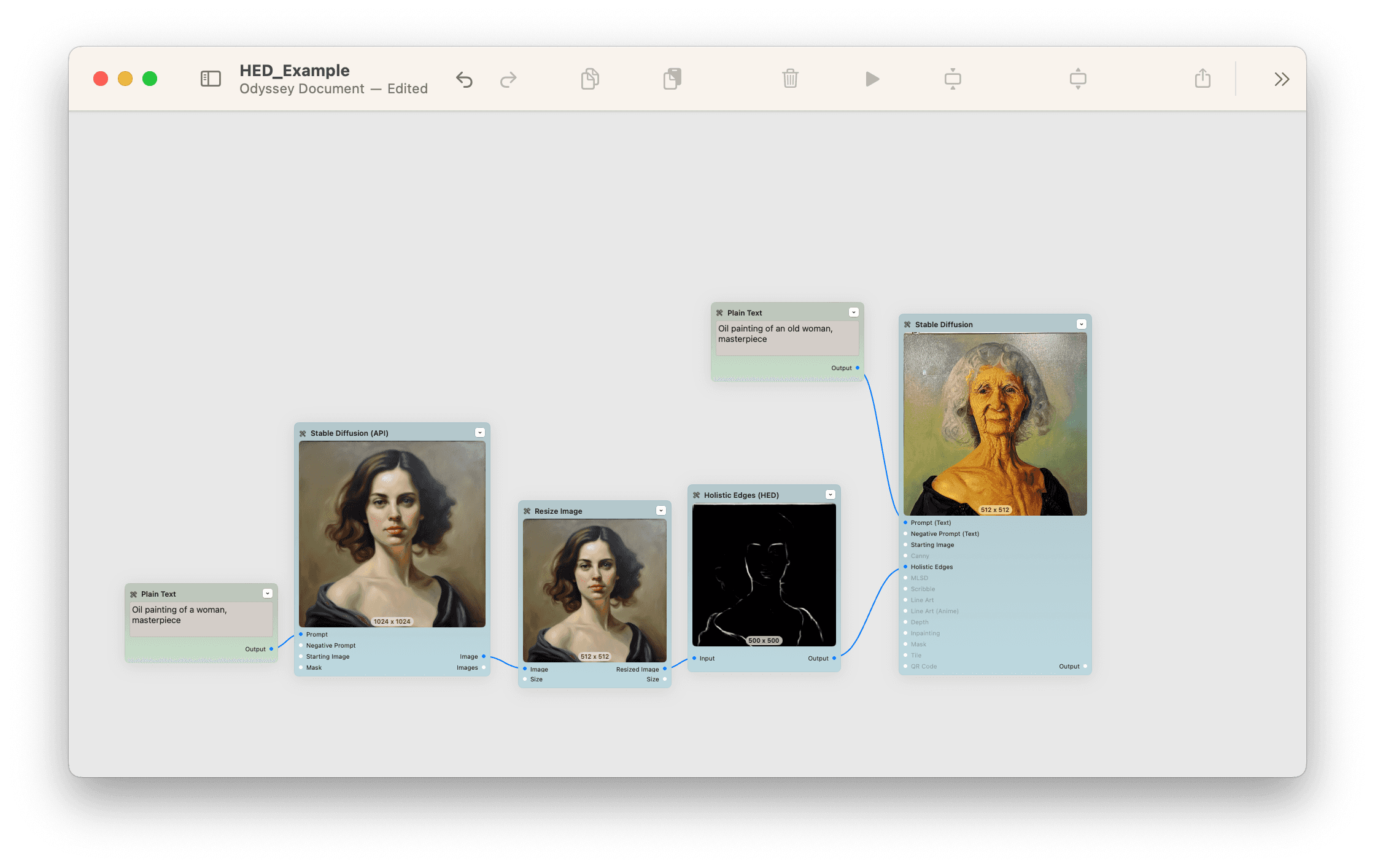
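The last step of the vase workflow, placing the original photo over the generated one, is ordinary alpha compositing. Here is a minimal sketch. It assumes you already have a cut-out mask of the vase (1.0 on the vase, 0.0 elsewhere, fractional values on soft edges) and that both images are the same size. Pixels are plain RGB tuples in this example; in practice an image library such as Pillow (`Image.composite`) would handle this step.

```python
def composite(fg, bg, alpha):
    """Alpha-composite fg over bg per pixel: out = a*fg + (1 - a)*bg.

    fg, bg: 2D lists of (r, g, b) tuples (0-255), same size.
    alpha:  2D list of floats in [0, 1]; 1.0 keeps the original photo.
    """
    return [
        [
            tuple(round(a * f + (1 - a) * b) for f, b in zip(fp, bp))
            for fp, bp, a in zip(fg_row, bg_row, a_row)
        ]
        for fg_row, bg_row, a_row in zip(fg, bg, alpha)
    ]


vase_photo = [[(200, 50, 50), (200, 50, 50), (200, 50, 50)]]  # original product shot
generated = [[(20, 20, 120), (20, 20, 120), (20, 20, 120)]]    # SD output, same size
vase_mask = [[1.0, 0.5, 0.0]]   # vase, soft edge, background (hypothetical cut-out)
print(composite(vase_photo, generated, vase_mask))
```

Because the generated vase already shares the original's outline, the seam between the two layers falls where the mask expects it.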

Holistic Edges

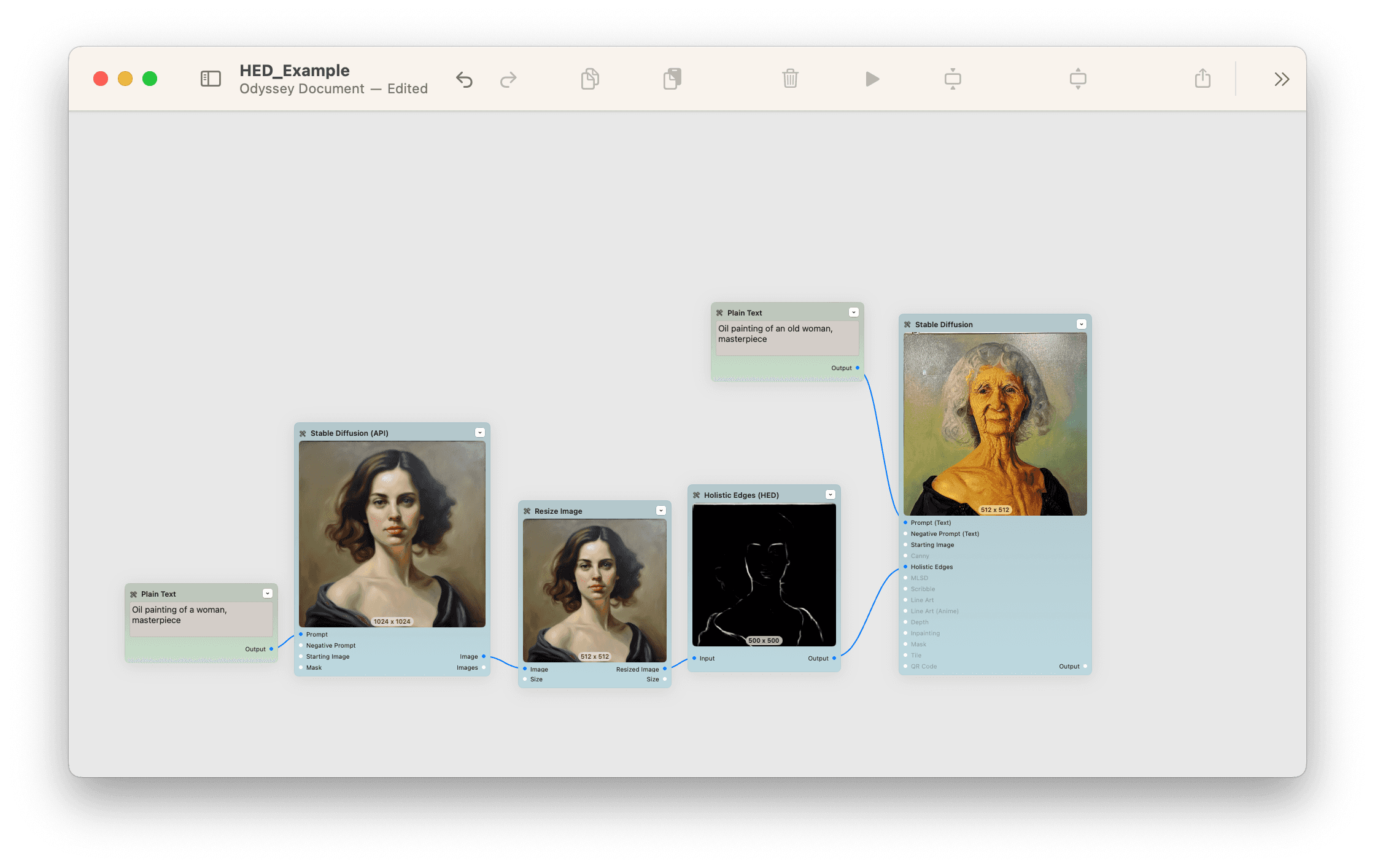

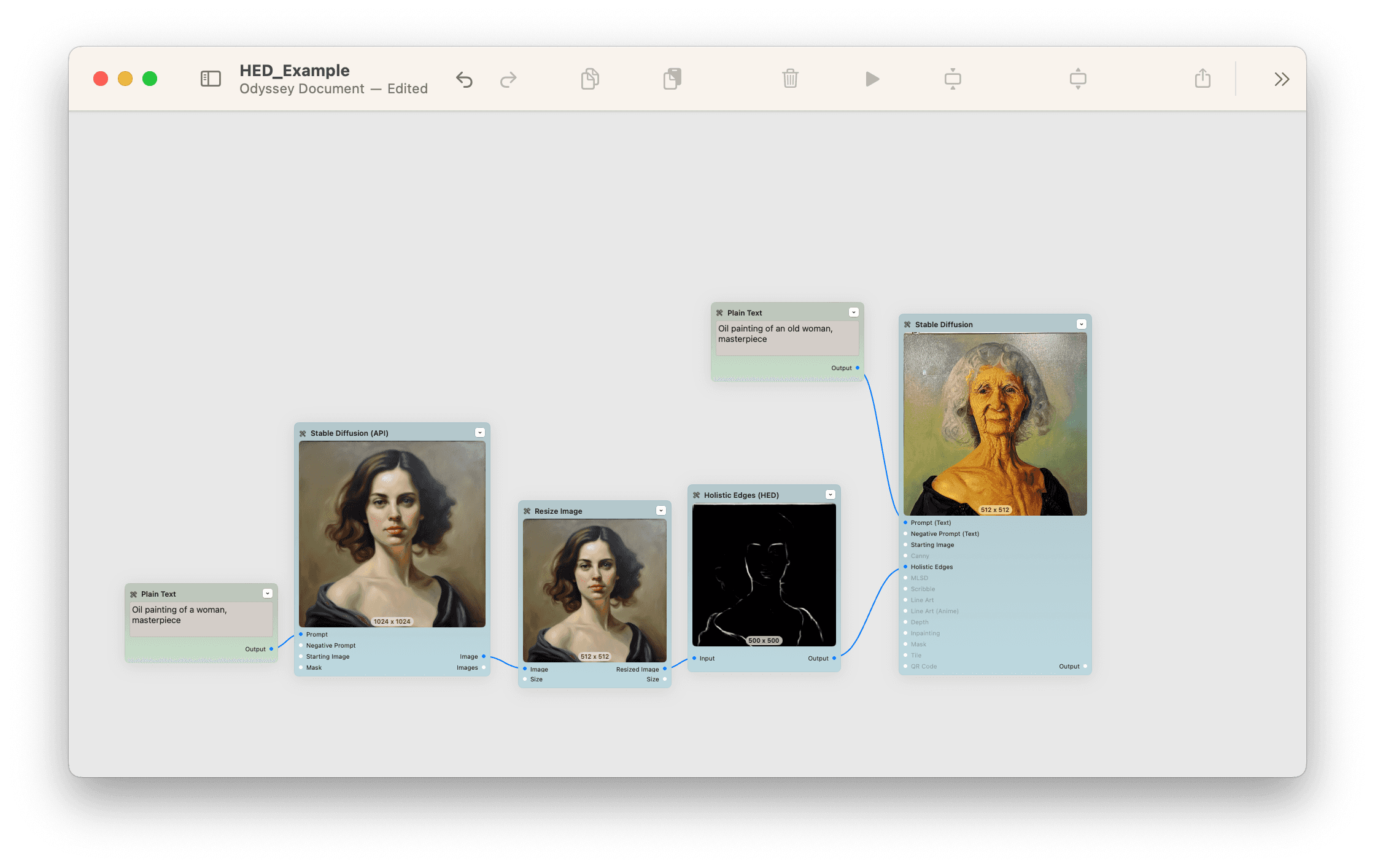

This model uses holistically nested edge detection, producing images with softer outlines. It's adept at preserving intricate details, though it might require some adjustments for consistent results. This is especially good for keeping a person's pose and then altering their appearance slightly.

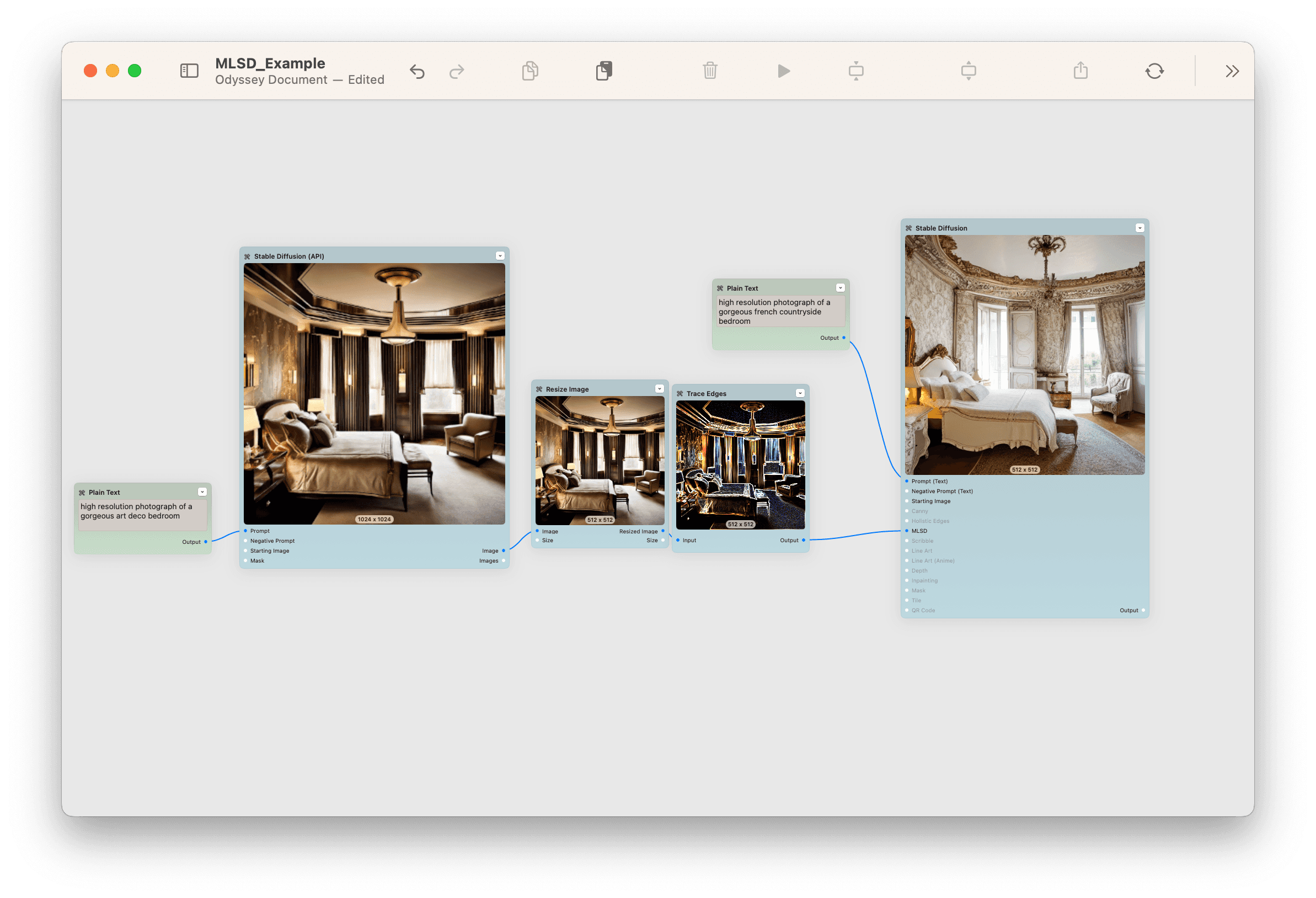

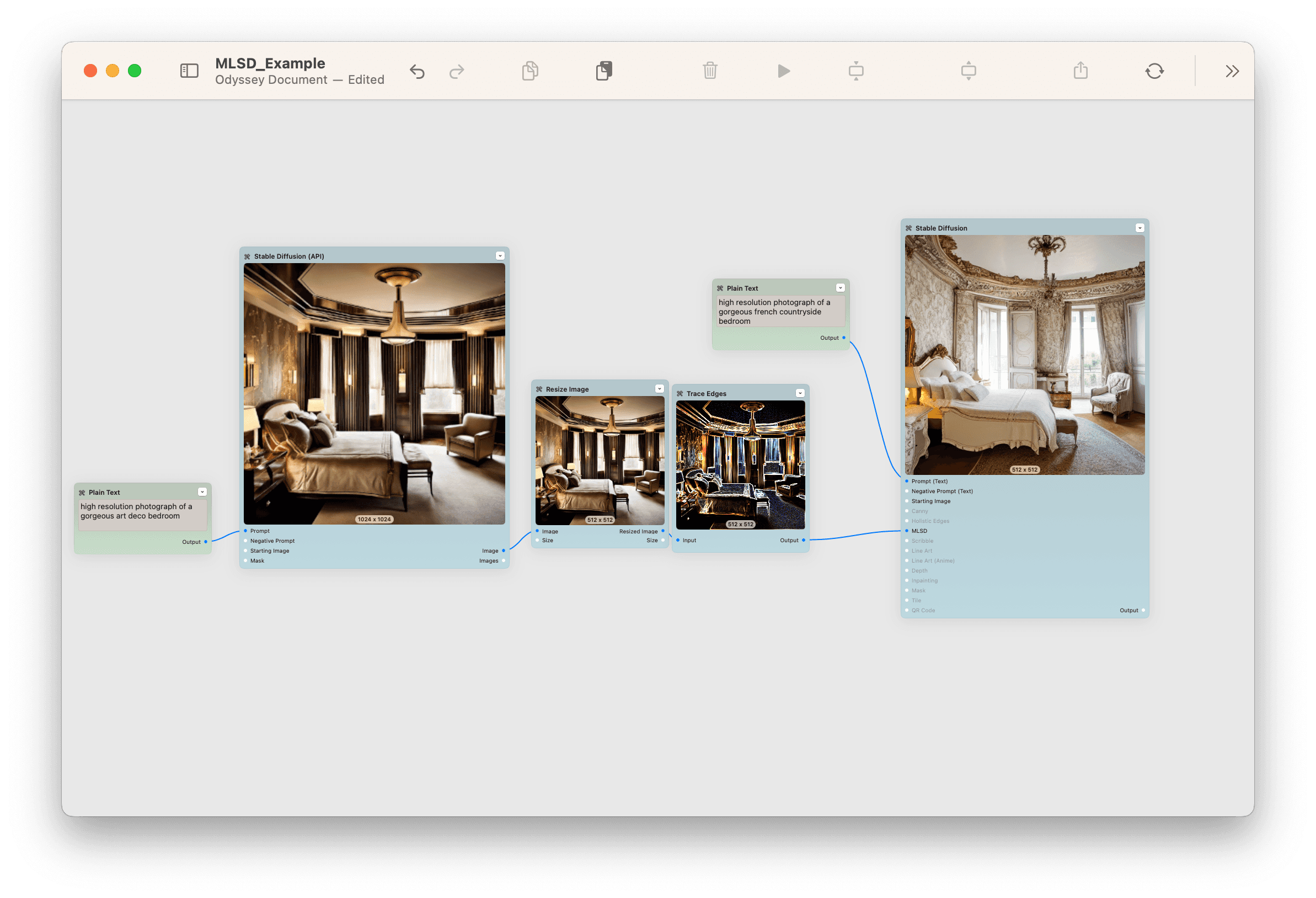

MLSD / Trace Edges

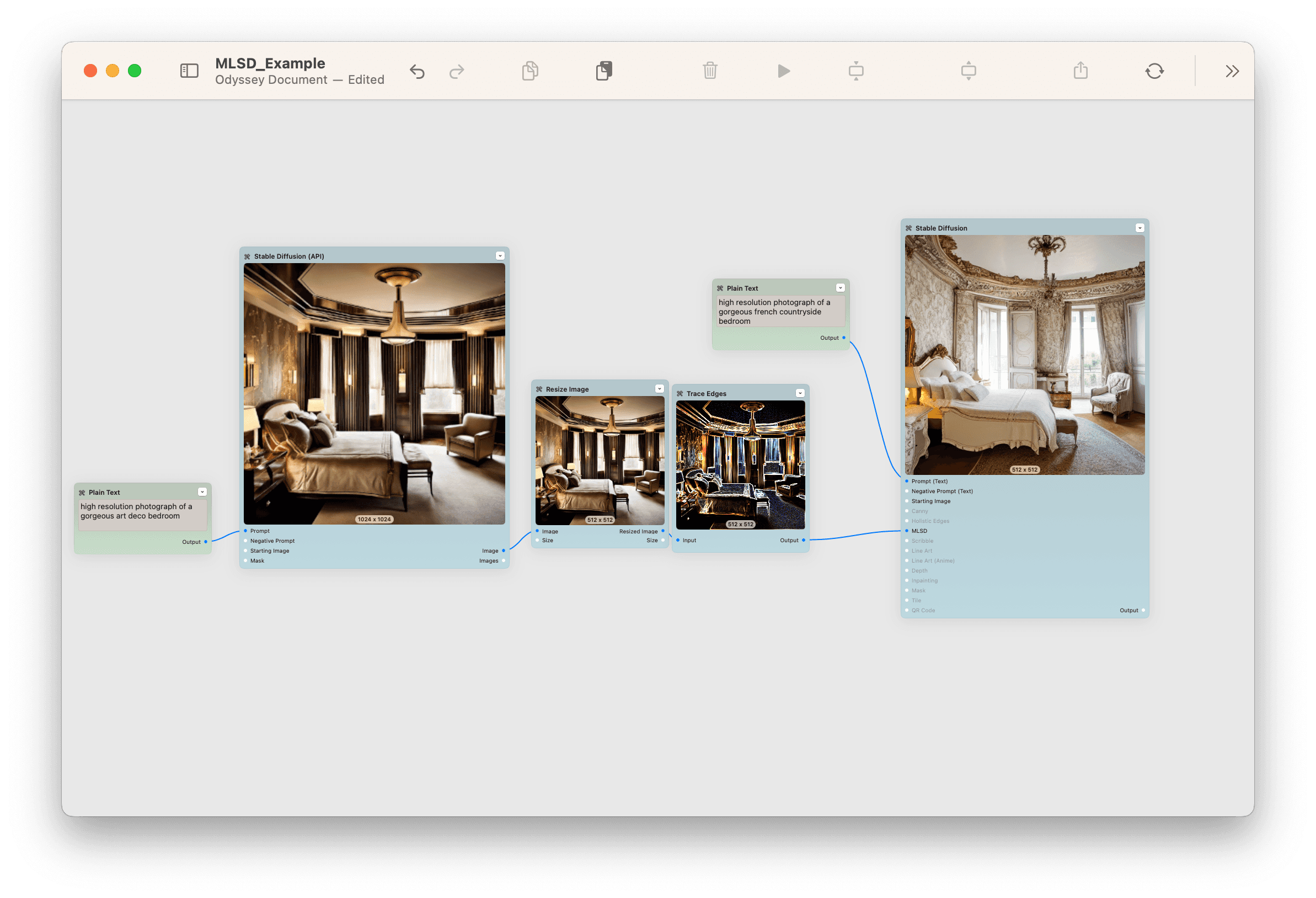

This model corresponds to Odyssey’s “trace edges” node, which retains the image’s color while drawing its edges. MLSD (Mobile Line Segment Detection) specializes in straight lines, which makes it especially good for interiors and rooms.

Depth

This model uses a depth map: a grayscale image that encodes how far each object is from the camera. It works like an enhanced version of image-to-image, letting you change the style of an image while keeping its overall composition.

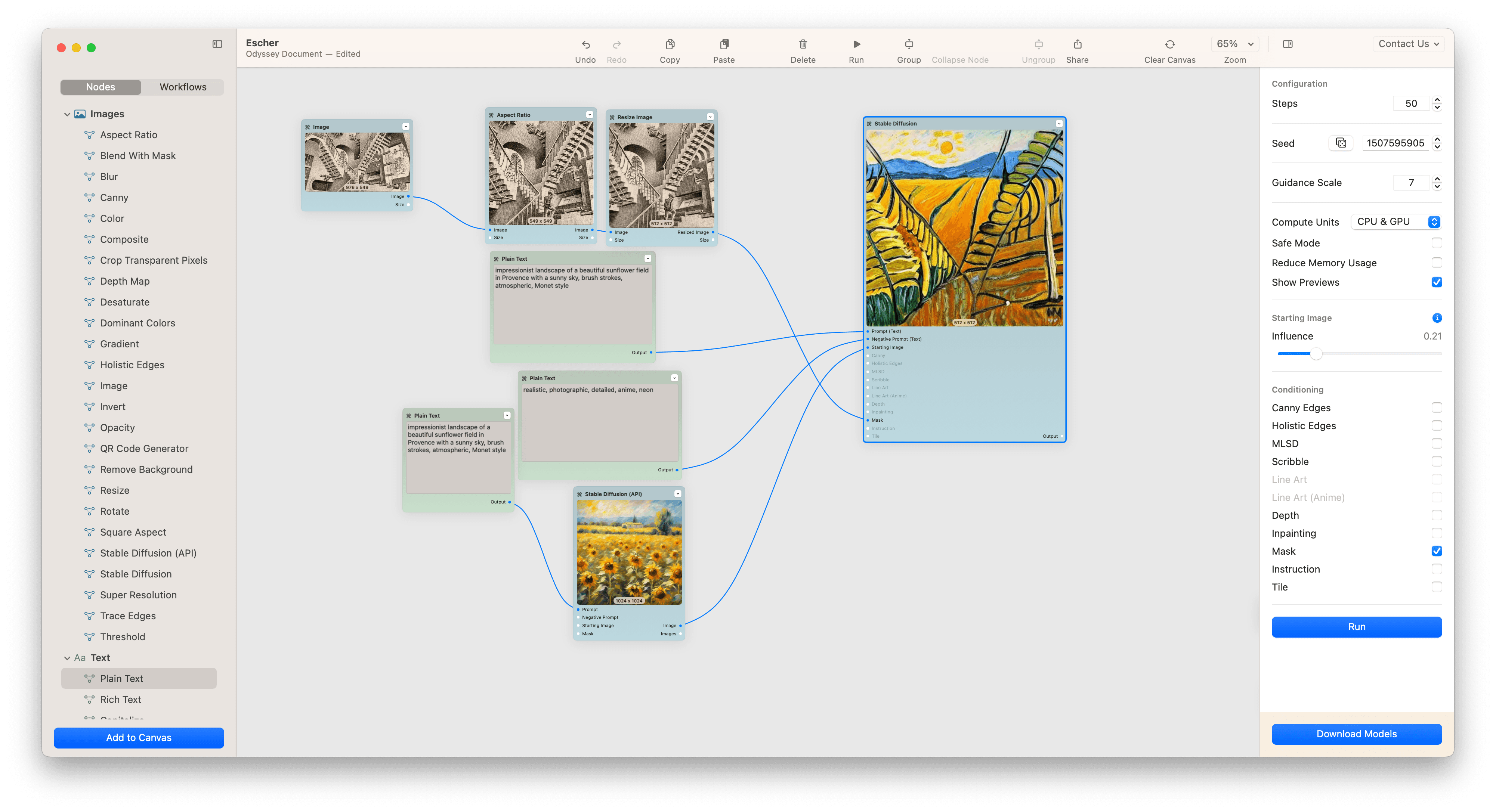

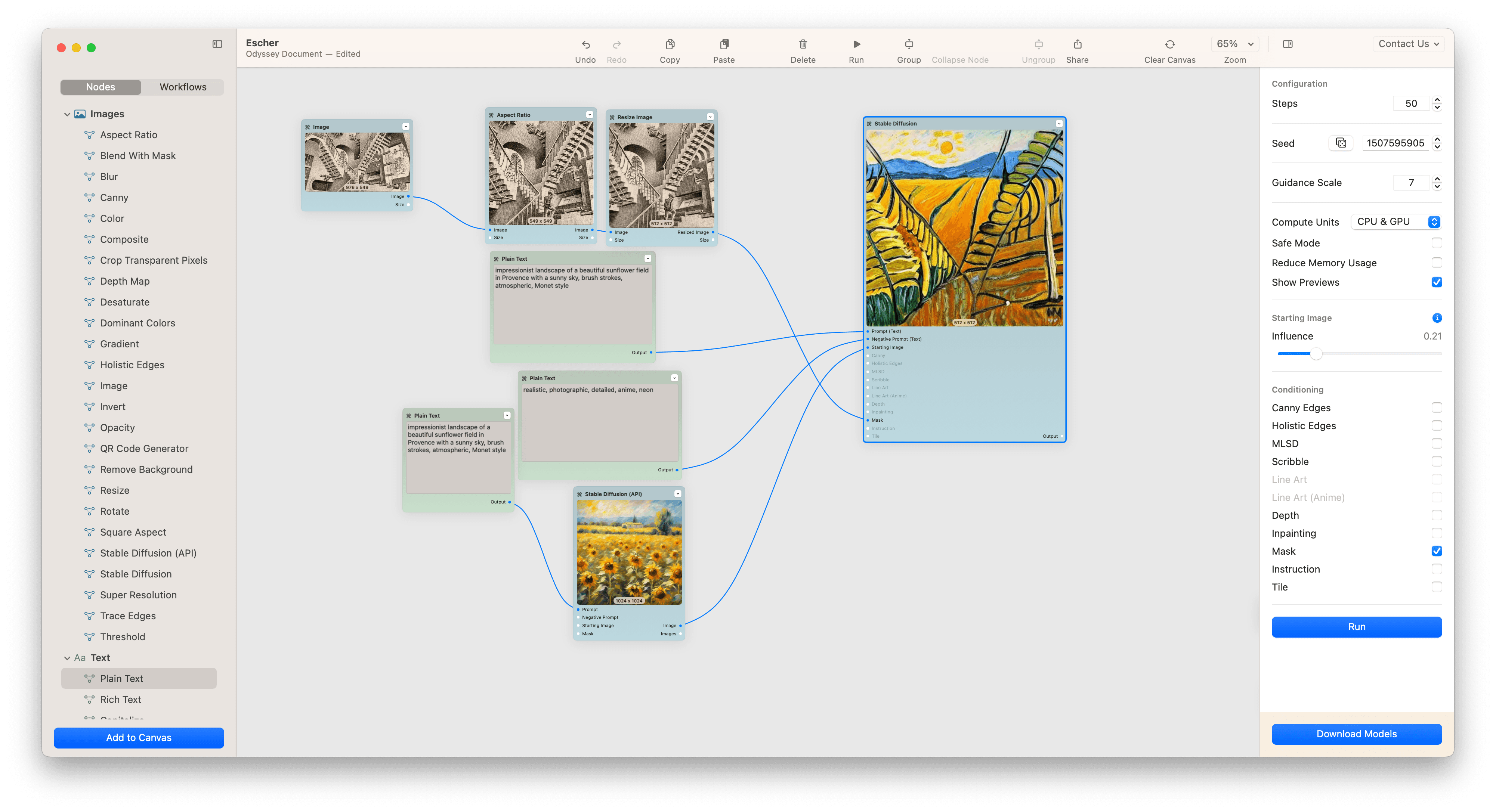

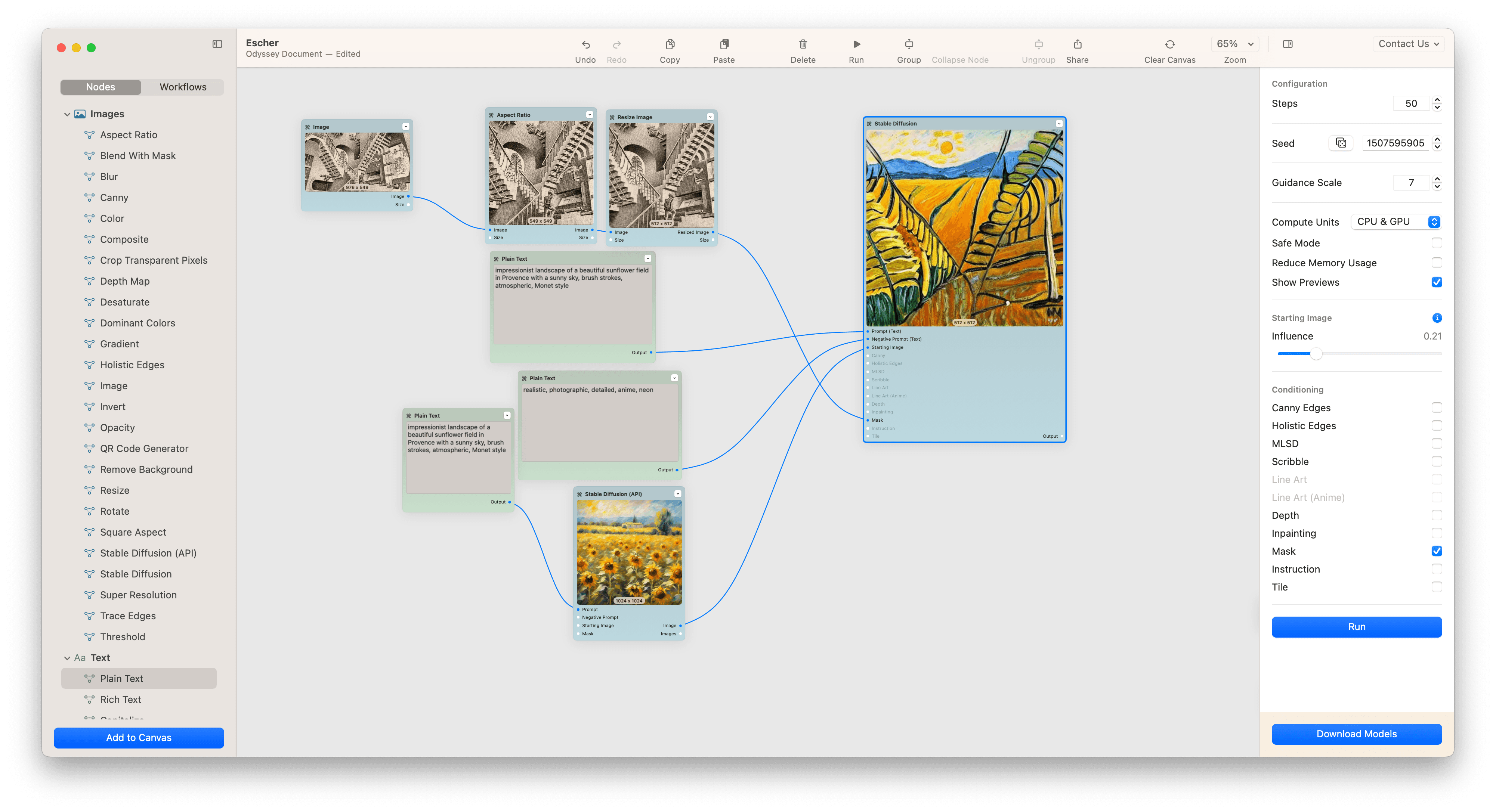
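Depth maps used with ControlNet, such as those from the MiDaS depth estimator, are conventionally drawn with near objects bright and far objects dark. The sketch below assumes you already have per-pixel distances (the values are made up) and normalizes them into that 0-255 convention using inverse depth. It illustrates the format only; it is not how Odyssey's depth node computes its map.

```python
def depth_to_grayscale(depth):
    """Map per-pixel distances to 0-255 gray, near = white, far = black.

    Uses inverse depth (1/d), which spends more of the gray range on
    nearby objects, similar in spirit to MiDaS-style relative depth.
    """
    inv = [[1.0 / d for d in row] for row in depth]
    lo = min(min(row) for row in inv)
    hi = max(max(row) for row in inv)
    span = (hi - lo) or 1.0          # avoid dividing by zero on flat scenes
    return [[round(255 * (v - lo) / span) for v in row] for row in inv]


distances_m = [
    [1.0, 1.0, 4.0],   # hypothetical distances from the camera, in metres
    [1.0, 2.0, 4.0],
]
for row in depth_to_grayscale(distances_m):
    print(row)
```

Only the relative layout of light and dark matters to ControlNet, which is why restyling with Depth keeps the scene's composition intact.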

Mask

This model separates the main subject from the background, which keeps the subject consistent in the output from Stable Diffusion. We typically use Mask to augment other ControlNet models, since it does an excellent job of reinforcing what needs to remain in the final generation. In this example, we used img2img to get the impressionistic sunflower landscape and then Mask to follow the same pattern as the original M.C. Escher drawing.

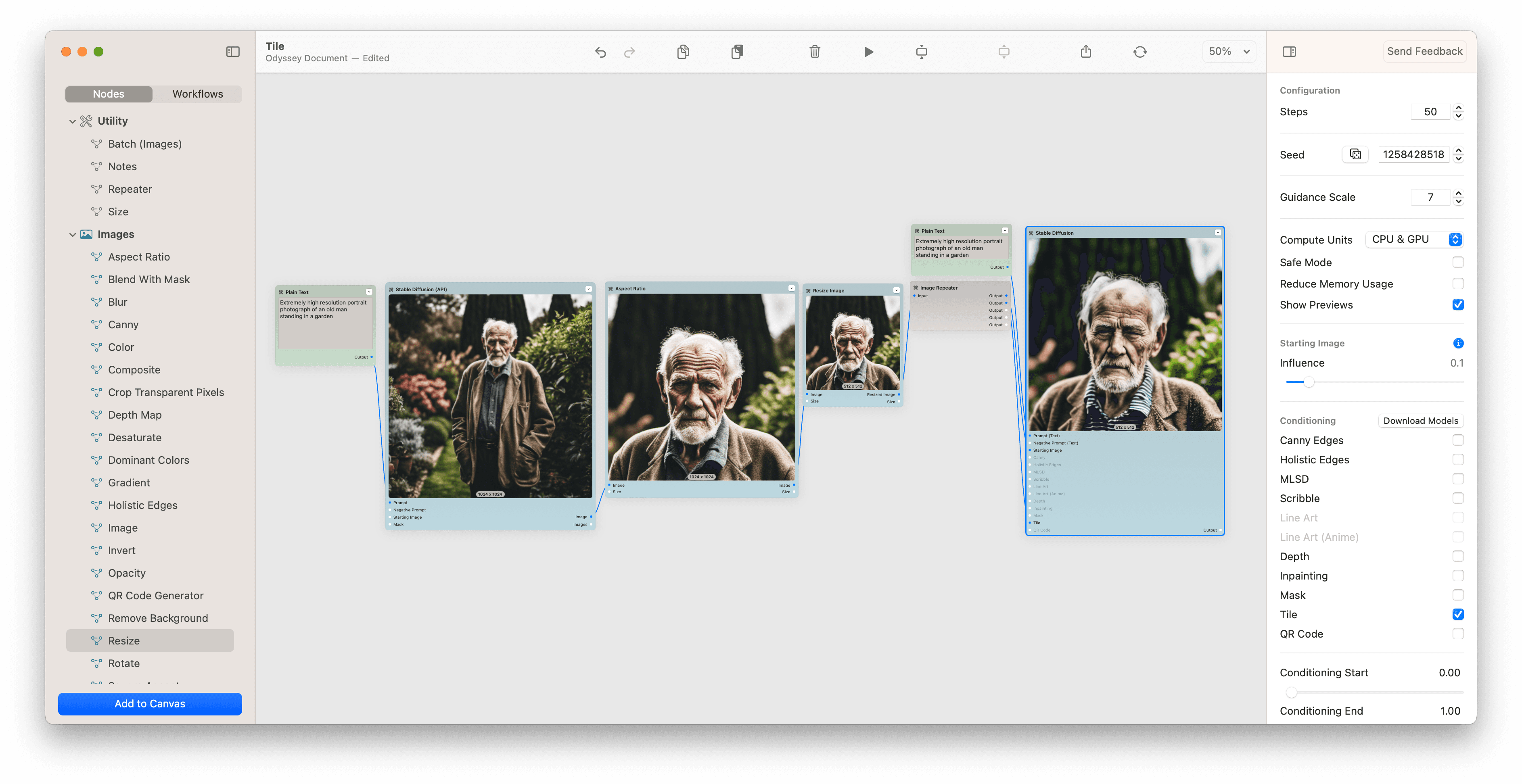

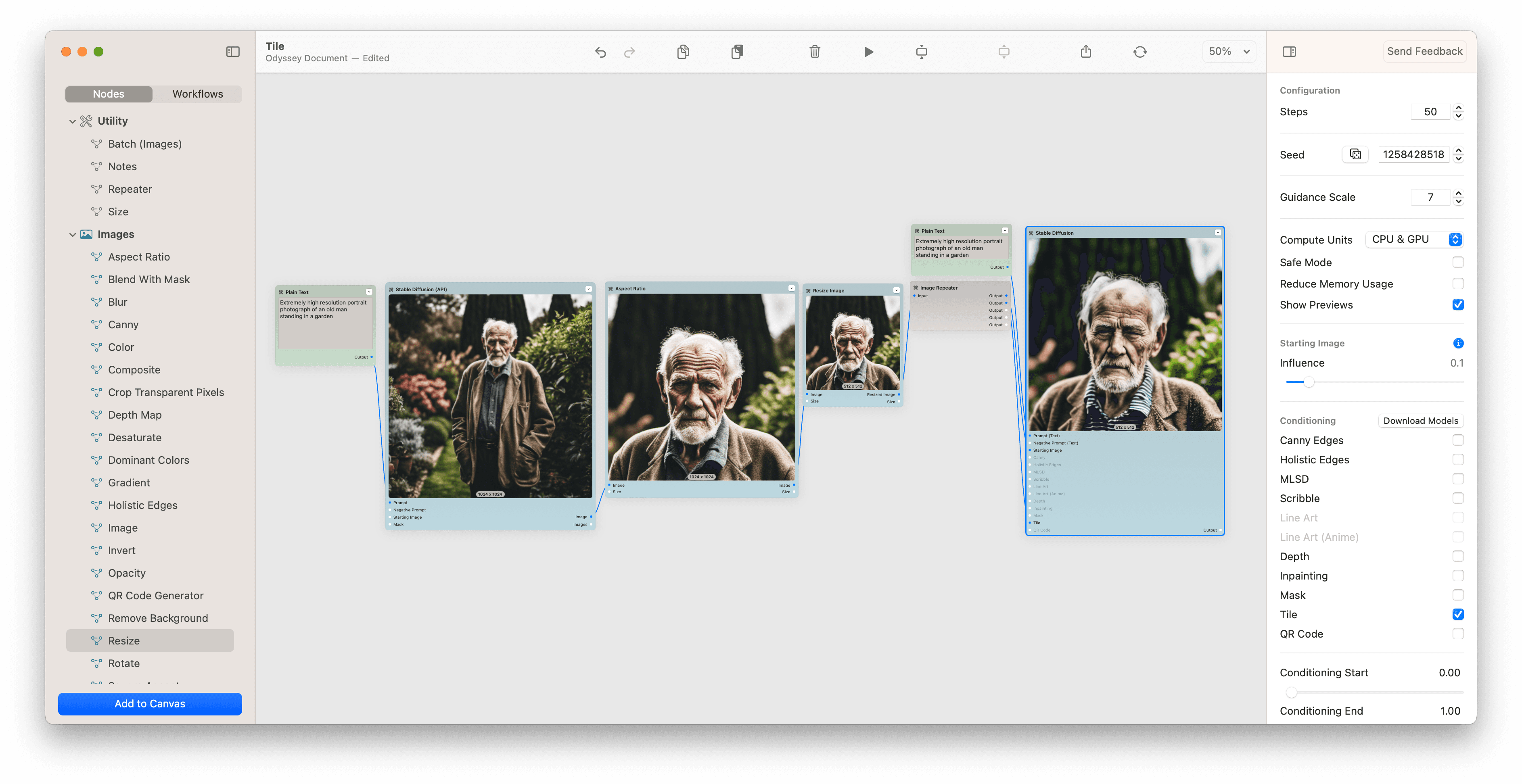

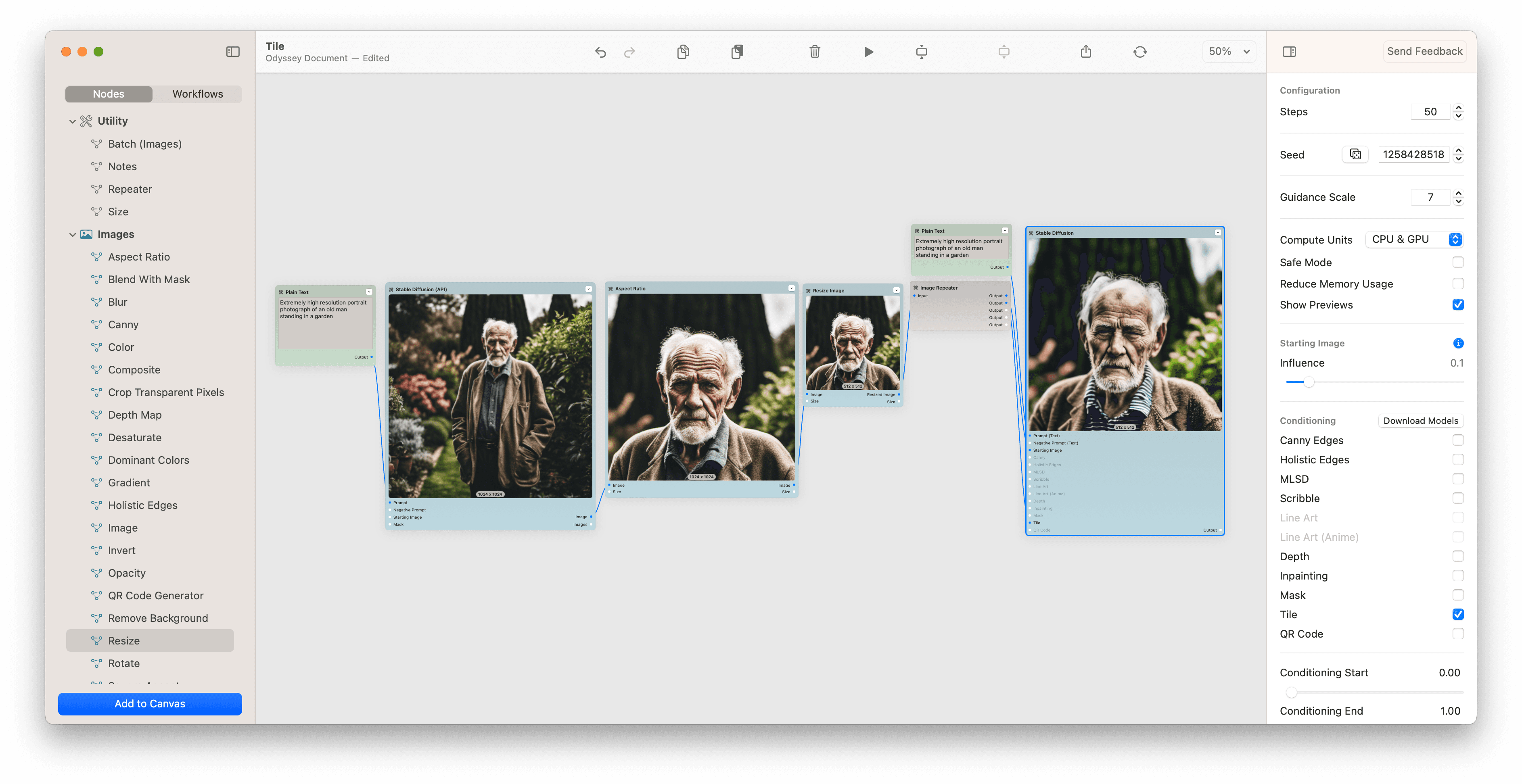
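A subject mask is simply a black-and-white image: white where the subject is, black for the background. Assuming you already have a per-pixel segmentation (the class IDs below are hypothetical), building such a mask is a simple lookup, as in this sketch:

```python
def subject_mask(labels, subject_ids):
    """Return a 0/255 mask: white where the pixel's label is a subject class."""
    return [[255 if label in subject_ids else 0 for label in row] for row in labels]


# Hypothetical segmentation: 0 = background, 1 = person, 2 = dog
labels = [
    [0, 1, 1, 0],
    [0, 1, 2, 2],
]
mask = subject_mask(labels, subject_ids={1, 2})
for row in mask:
    print(row)
```

Passing several class IDs lets one mask protect multiple subjects at once.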

Tile

Ideal for images lacking detail, the Tile model adds intricate detail to AI-generated images. It works by filling in the missing detail of a lower-resolution image. These details are often invented, meaning Tile adds information that can cause the output to diverge from the original input. We have a lot planned for Tile, so stay tuned; in the meantime, here's an example of what it can do today.

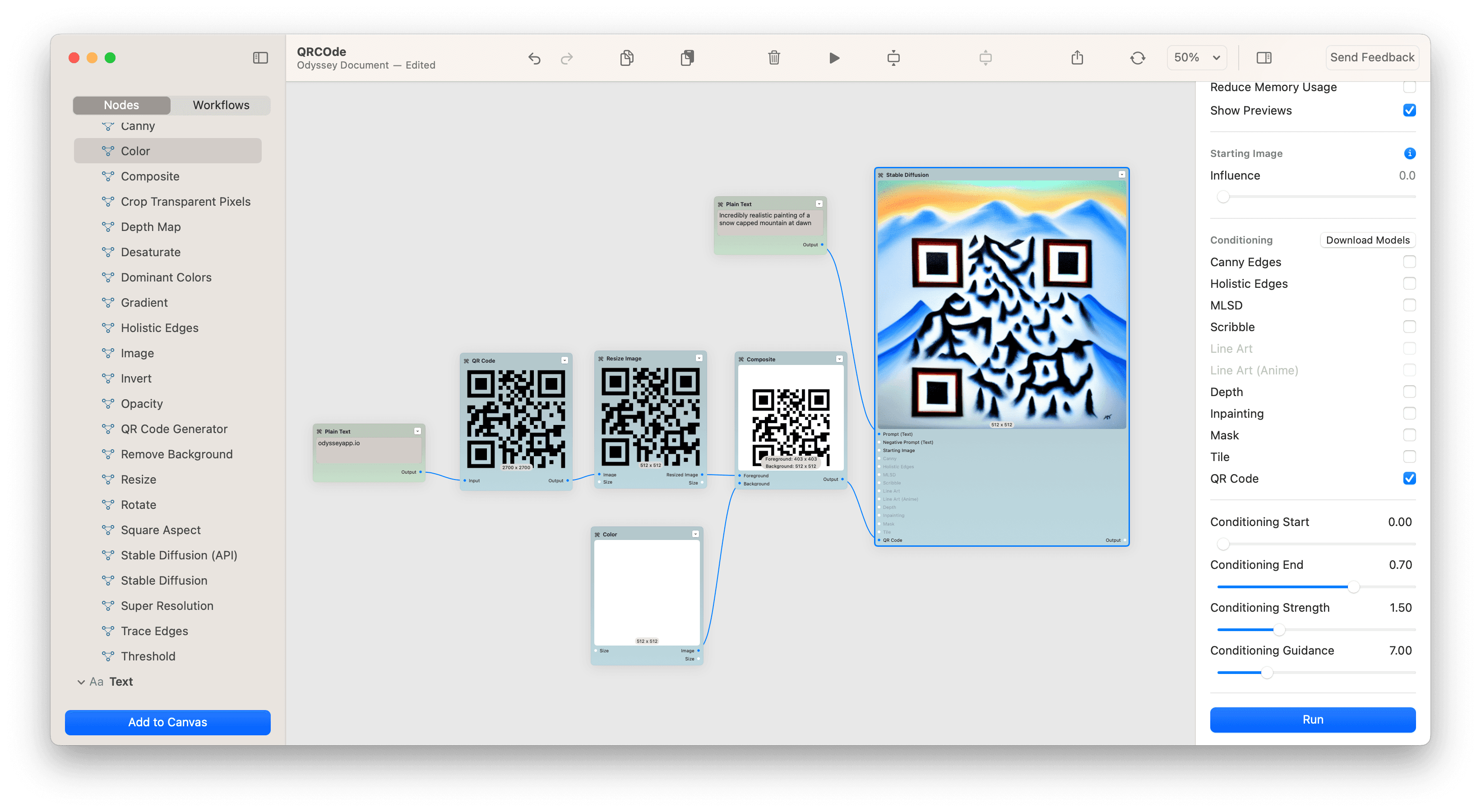

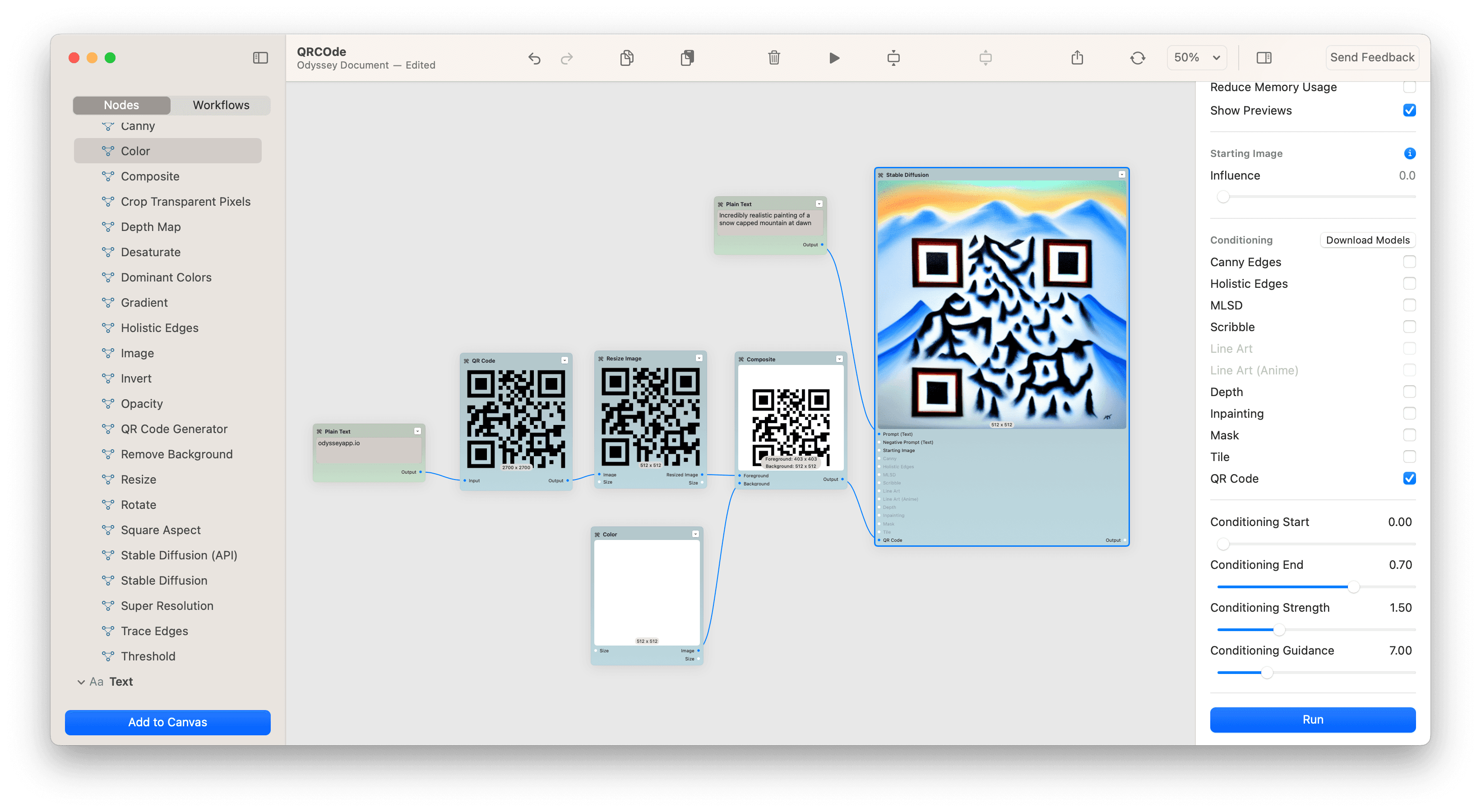

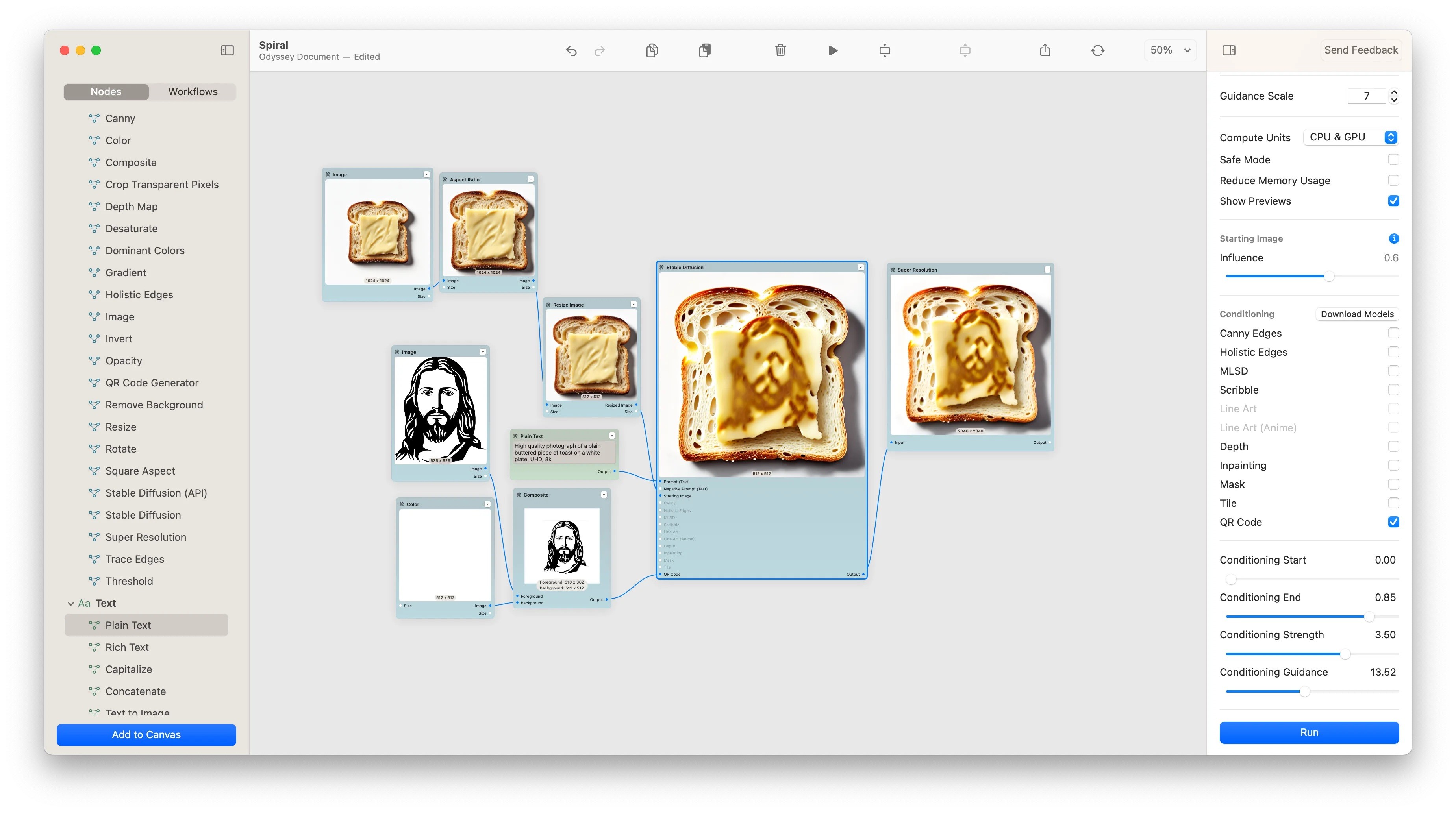

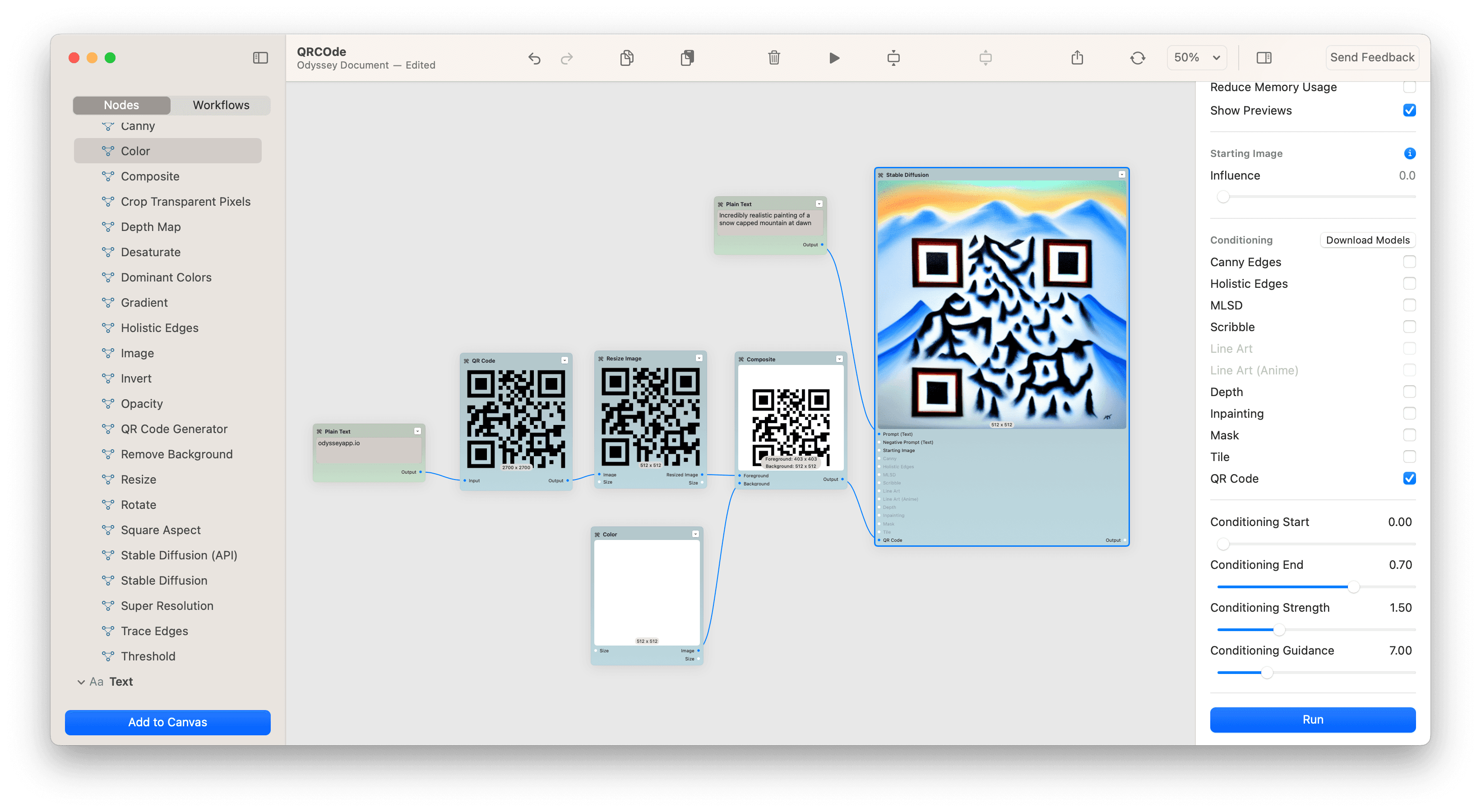

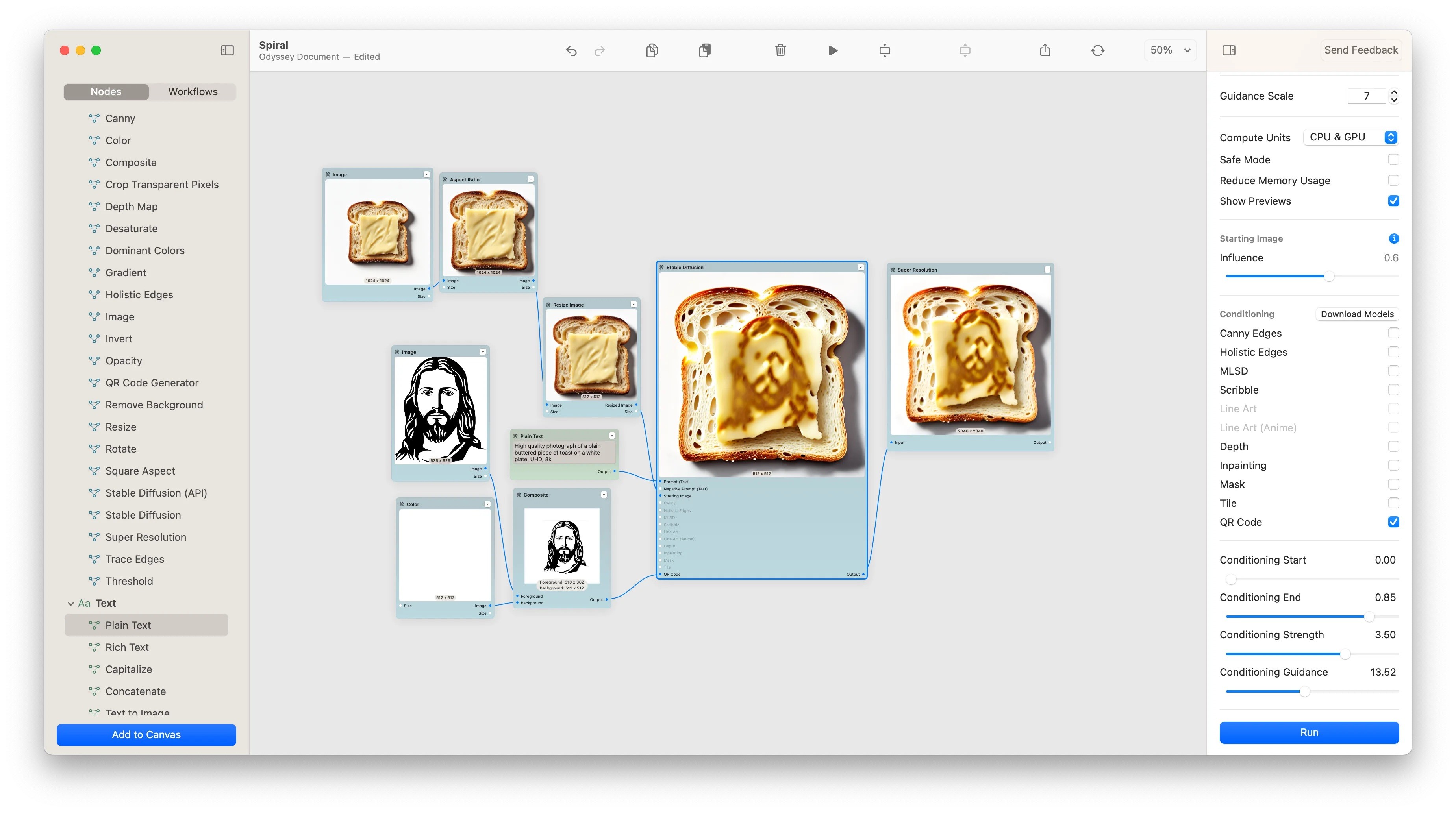
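In a typical upscaling workflow, the image Tile starts from is a low-resolution picture enlarged to the generation size, so it carries the overall layout and colors but little fine detail. As a sketch of that preparation step, assuming plain nearest-neighbour resizing (real tools often use smoother resampling), here is a tiny upscaler; the blocky result is what Tile then "fills in".

```python
def upscale_nearest(img, factor):
    """Enlarge a 2D image by an integer factor using nearest-neighbour sampling."""
    return [
        [img[y // factor][x // factor] for x in range(len(img[0]) * factor)]
        for y in range(len(img) * factor)
    ]


low_res = [[10, 200], [90, 40]]   # hypothetical 2x2 grayscale image
for row in upscale_nearest(low_res, 2):
    print(row)
```

Each original pixel becomes a flat block, and it is those flat regions that Tile replaces with plausible, newly generated detail.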

Monster QR

This versatile model embeds QR codes, patterns, and text into images. We have a complex workflow for using the QR code model to generate spiral patterns, but that's only the tip of the iceberg. The QR code model is great at, yes, QR codes, but it's also great at "hiding" figures or words within a generated image.
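A spiral workflow starts from a plain black-and-white pattern image that the Monster QR model then "hides" in the generation. As a sketch, assuming an Archimedean spiral and arbitrary sizes, this function generates such a pattern as a 2D grid of 0/255 pixels:

```python
import math


def spiral_pattern(size, spacing):
    """Black-and-white Archimedean spiral as a size x size grid of 0/255.

    A point at polar coords (r, theta) around the centre is white when
    (r / spacing - theta / 2pi) mod 1 < 0.5, giving one spiral arm whose
    bands repeat every `spacing` pixels along any ray.
    """
    c = (size - 1) / 2
    img = []
    for y in range(size):
        row = []
        for x in range(size):
            r = math.hypot(x - c, y - c)
            theta = math.atan2(y - c, x - c)
            phase = (r / spacing - theta / (2 * math.pi)) % 1.0
            row.append(255 if phase < 0.5 else 0)
        img.append(row)
    return img


pattern = spiral_pattern(size=512, spacing=48)
```

To use it, you would save the grid as a grayscale image (for example with Pillow's `Image.new("L", (512, 512))` followed by `putdata` on the flattened rows) and feed it to the Monster QR ControlNet. A smaller `spacing` gives tighter coils, which tend to be harder for the model to hide.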

Coming Soon to Odyssey

OpenPose, a tool that lets you set a human figure's pose so that Stable Diffusion generates an image matching it.

Mastering ControlNet Settings

Conditioning Start: This determines the diffusion step at which the ControlNet input starts to apply. Starting ControlNet later in the generation lets more of the prompt come through, so the input is less pronounced.

Conditioning End: This determines the diffusion step at which the ControlNet input stops applying. Like the start point, the end point can dramatically change the result: ending earlier leaves the final steps to the prompt alone, while ending later keeps the input shaping the image until the end of the diffusion process.

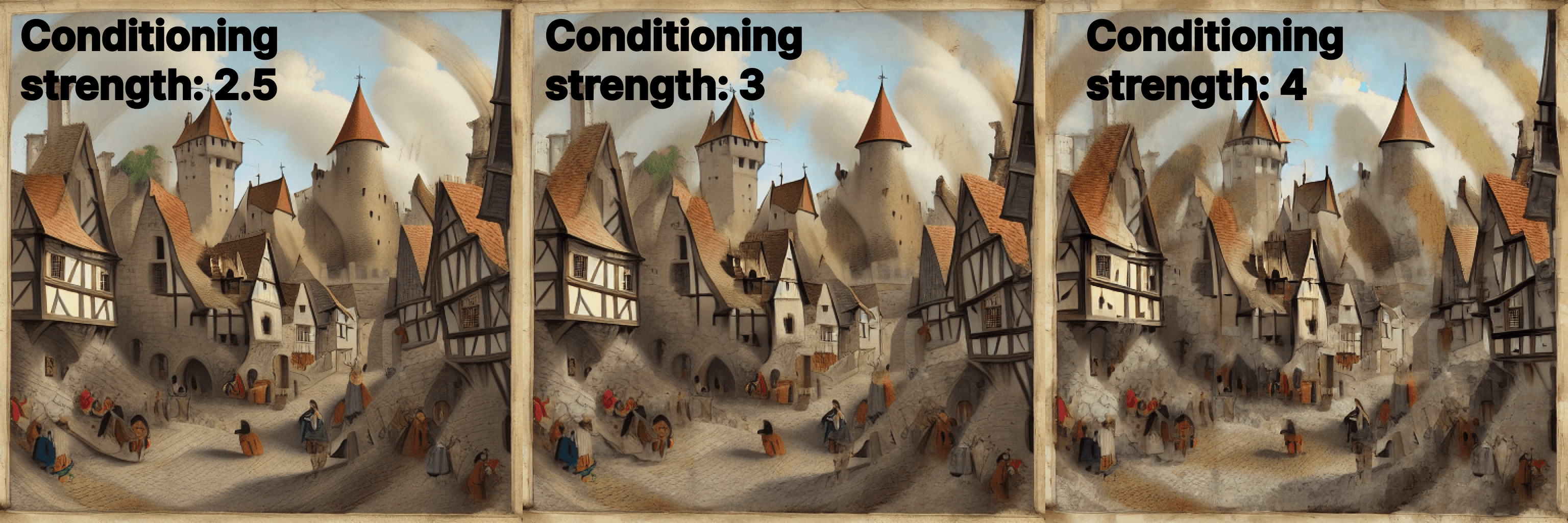

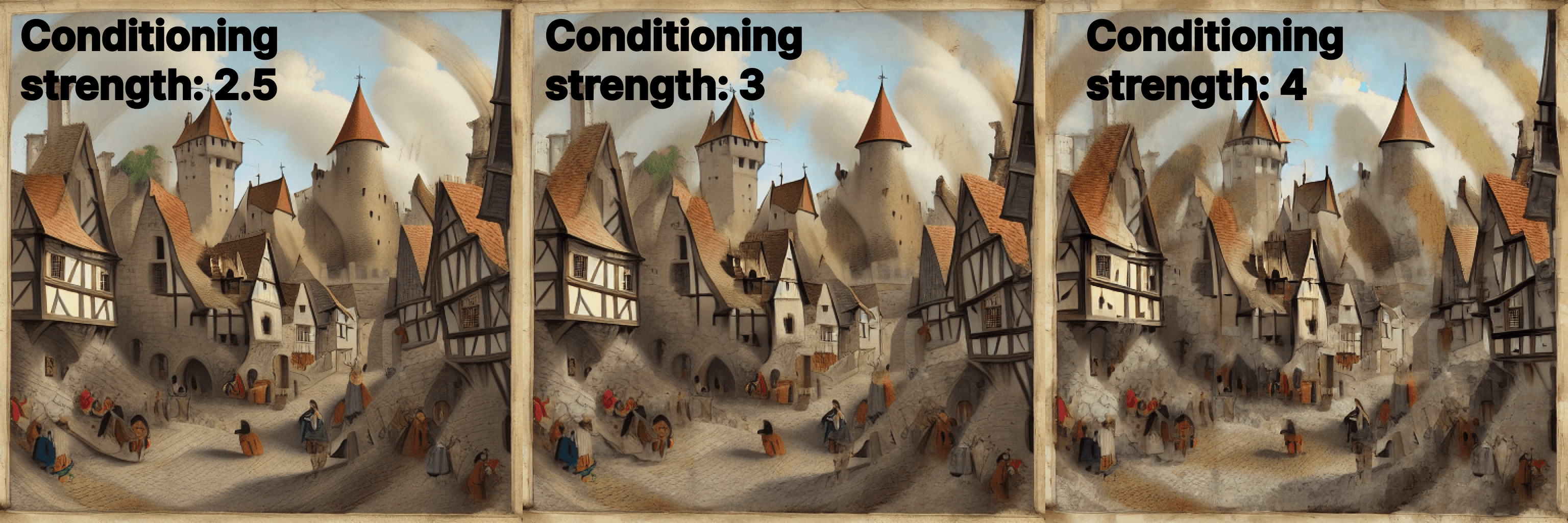

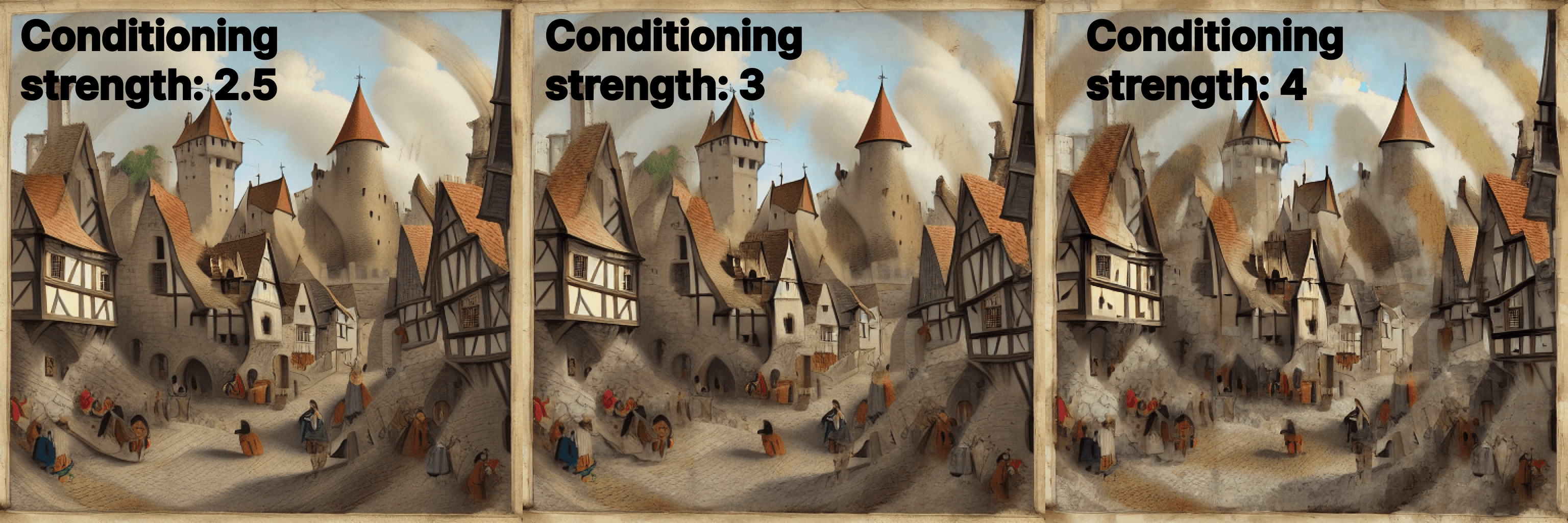

Conditioning Strength: This setting influences the ControlNet input's impact on the final image. It's sensitive, so even slight adjustments can produce varied results.
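To make Conditioning Start, End, and Strength concrete, here is a sketch of how a sampler could decide ControlNet's weight at each step. It assumes start and end are fractions of the total step count and strength is a multiplier on ControlNet's contribution. This mirrors the `control_guidance_start`, `control_guidance_end`, and `controlnet_conditioning_scale` parameters in Hugging Face diffusers; Odyssey's own units and internals may differ, and `controlnet_weights` is a hypothetical helper.

```python
def controlnet_weights(num_steps, start=0.0, end=1.0, strength=1.0):
    """Multiplier applied to ControlNet's output at each sampling step.

    start/end are fractions of the schedule (0.0 = first step, 1.0 = last).
    Step i counts as active when i/N >= start and (i+1)/N <= end; inactive
    steps get 0.0, so only the text prompt guides them. Active steps are
    scaled by `strength`.
    """
    weights = []
    for i in range(num_steps):
        active = i / num_steps >= start and (i + 1) / num_steps <= end
        weights.append(strength if active else 0.0)
    return weights


# 10 steps: ControlNet guides only the first 60% of steps, at 80% strength.
print(controlnet_weights(10, start=0.0, end=0.6, strength=0.8))
```

Inside the denoising loop, ControlNet's residual features would be multiplied by `weights[i]` before being added to the UNet's at step i. Because early steps mostly set the composition and late steps refine detail, a later start loosens the layout, while an earlier end frees the finishing details.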

Conditioning Guidance: This determines how closely the generated image follows the ControlNet input. It's akin to prompt guidance, telling Stable Diffusion how strictly to adhere to the input.
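For reference, prompt guidance in Stable Diffusion is classifier-free guidance: at each step the model predicts the noise both with and without the prompt and pushes the result away from the unconditional prediction. Assuming Conditioning Guidance applies the same idea to the ControlNet input (an analogy, not a documented formula for Odyssey), the guided prediction at step $t$ would look like:

$$
\hat{\epsilon}_t = \epsilon_\theta(x_t, \varnothing) + w \left( \epsilon_\theta(x_t, c) - \epsilon_\theta(x_t, \varnothing) \right)
$$

Here $x_t$ is the noisy image at step $t$, $\epsilon_\theta$ the model's noise prediction, $c$ the conditioning, $\varnothing$ the empty (unconditional) input, and $w$ the guidance scale. With $w = 1$ the result is just the conditional prediction; larger values make the image follow the conditioning more strictly.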

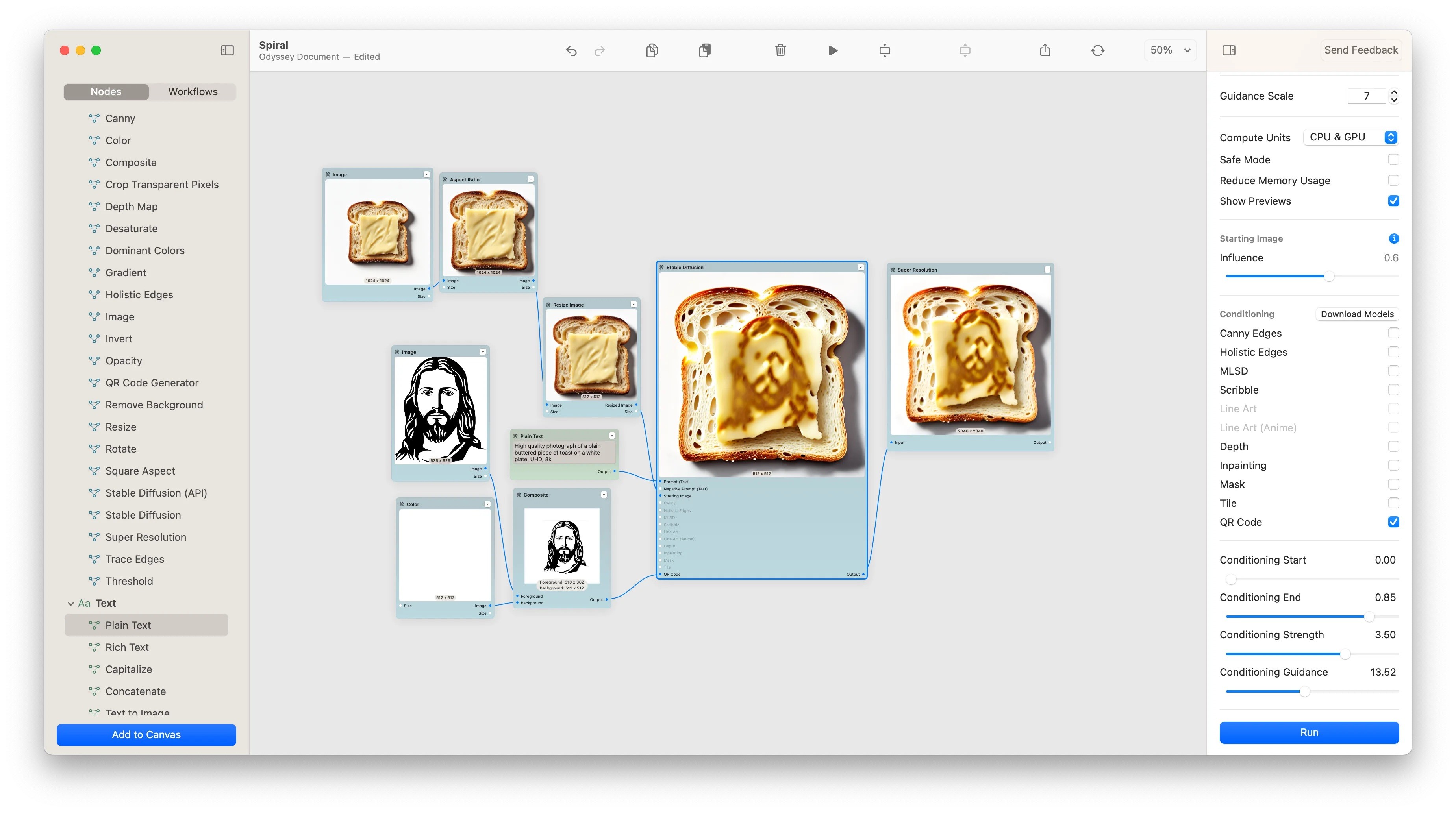

Here's an example of how these settings affect whether a "spiral" shows up with the Monster QR ControlNet.

Conclusion

ControlNet has undeniably revolutionized image diffusion models, offering unparalleled control and precision in generating images. With its integration into platforms like Odyssey, users can harness its full potential and craft images that match their vision. Even so, this revolution has only just begun. In the coming weeks and months, we expect more SDXL ControlNets to be released (and to become more performant). We'll integrate these models into Odyssey as soon as they're available, and if there's anything we're missing, feel free to reach out.


